Helping robots learn using demonstrations

Machine learning can help robots learn new skills by finding patterns in data, but it can take a lot of time and many examples before a robot can do something as seemingly simple as grasping an object. If we want robots to be effective teammates that can help increase our productivity, efficiency, and even quality of life, then we need them to continuously learn complex tasks reliably and quickly. What if we could give them a hand when they need it, so they could learn more effectively?

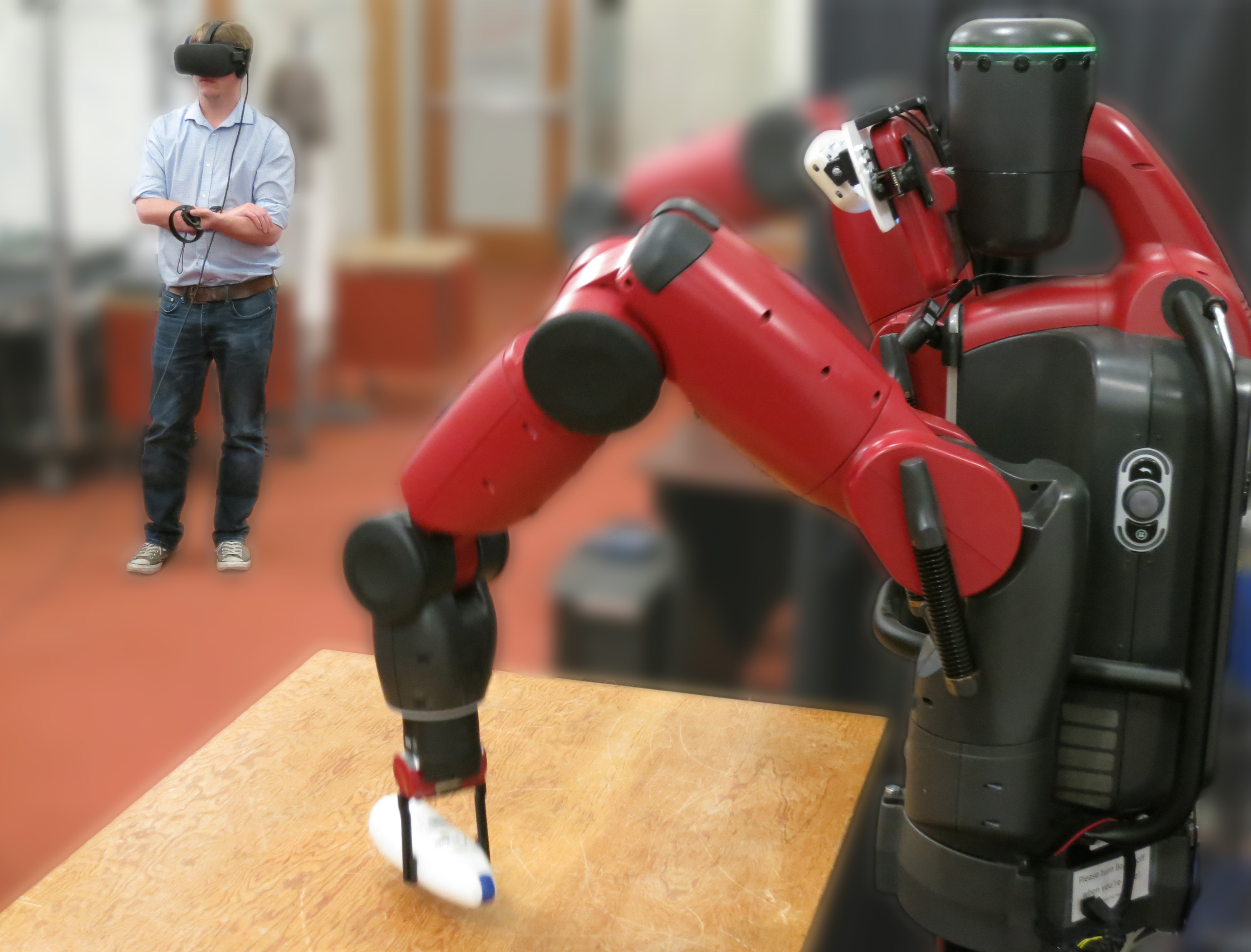

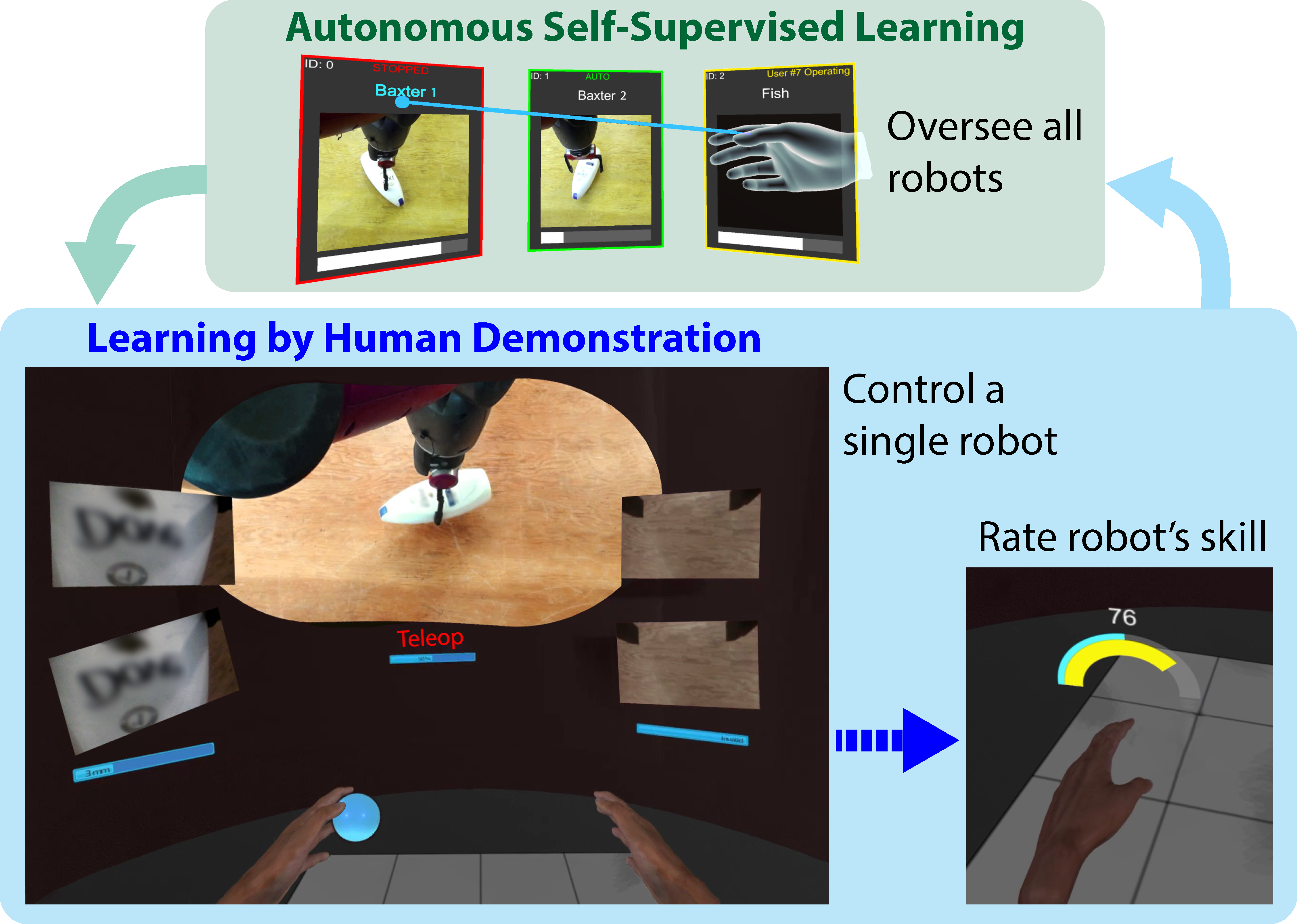

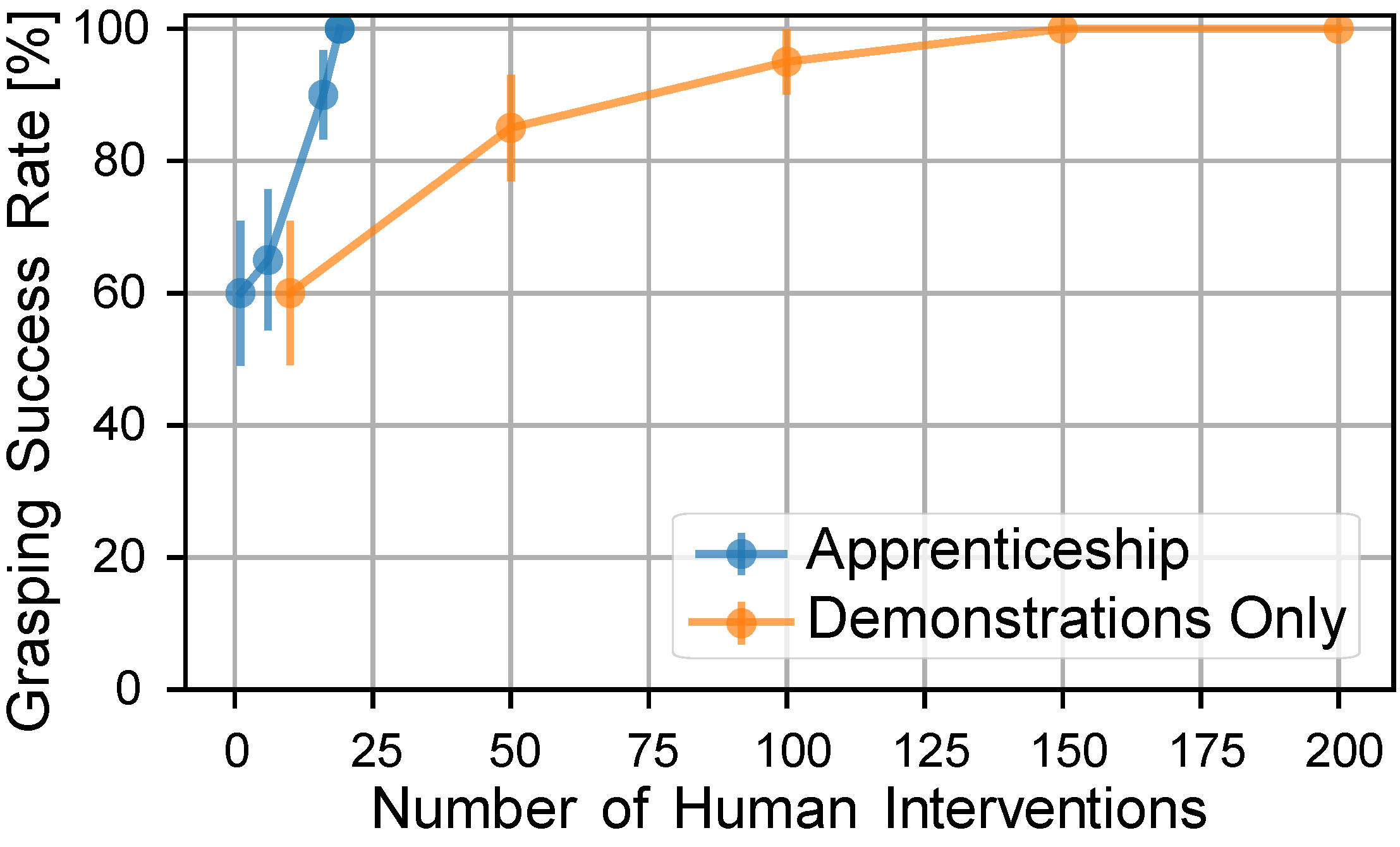

This project explores a master-apprentice model of learning that combines self-supervision with learning by demonstration. A robot learns to grasp on its own by repeatedly trying to pick up a bottle. But if it can't find a good grasp using its current model, it asks a person for help. The person is supervising the robot in a virtual reality (VR) control room, and they can take control of the robot to provide grasping demonstrations. The robot learns from these demonstrations, and then continues learning on its own. This allows the robot to learn faster and keeps the person's workload reasonable.

The person only needs to provide demonstrations when the robot can't do the task on its own, and they only need to provide examples of successful grasps - the robot finds its own examples of failed grasps. This team structure allows the robot to learn faster by strategically leveraging human experience in the continuous learning process.
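The learning loop described above can be sketched as a toy simulation. This is a minimal illustration under stated assumptions, not the project's implementation: a grasp is reduced to a single hypothetical angle, the grasp "model" is a simple nearest-example scorer, `CONFIDENCE_THRESHOLD` is an assumed cutoff for deciding that the model cannot find a good grasp, and `human_demonstration()` stands in for a VR teleoperated demonstration that always succeeds. All of these names are hypothetical. What the sketch does show is the division of labor: the person supplies only successful grasps, and only when asked, while the robot labels its own attempts as successes or failures.

```python
import random
from dataclasses import dataclass, field

# Hypothetical toy setup (not from the project): a grasp is one angle in [0, 1),
# and grasps close to a hidden "true" angle succeed.
TRUE_ANGLE = 0.3
TOLERANCE = 0.05
CONFIDENCE_THRESHOLD = 0.5  # assumed cutoff for asking the human for help


def grasp_succeeds(angle: float) -> bool:
    """Simulated robot trial: succeeds if the angle is near the true one."""
    return abs(angle - TRUE_ANGLE) < TOLERANCE


@dataclass
class GraspModel:
    """Toy model scoring a candidate by its distance to known outcomes."""
    positives: list = field(default_factory=list)
    negatives: list = field(default_factory=list)

    def score(self, angle: float) -> float:
        # With no successful examples yet, the model has no confidence at all.
        if not self.positives:
            return 0.0
        d_pos = min(abs(angle - p) for p in self.positives)
        d_neg = min((abs(angle - n) for n in self.negatives), default=1.0)
        # Above 0.5 means closer to a known success than to a known failure.
        return d_neg / (d_pos + d_neg + 1e-9)

    def best_candidate(self, candidates):
        return max(candidates, key=self.score)


def human_demonstration() -> float:
    """Stand-in for a VR teleoperated demonstration: always a successful grasp."""
    return TRUE_ANGLE


def apprentice_learning(num_trials: int, seed: int = 0):
    rng = random.Random(seed)
    model = GraspModel()
    requests = 0
    for _ in range(num_trials):
        candidates = [rng.random() for _ in range(20)]
        best = model.best_candidate(candidates)
        if model.score(best) < CONFIDENCE_THRESHOLD:
            # No confident grasp: ask the supervisor for a successful example.
            requests += 1
            model.positives.append(human_demonstration())
            continue
        # Self-supervised attempt: the robot labels its own outcome,
        # so failed grasps never require human input.
        if grasp_succeeds(best):
            model.positives.append(best)
        else:
            model.negatives.append(best)
    return model, requests


model, requests = apprentice_learning(num_trials=150)
print(f"Human demonstrations requested: {requests}")
```

The number of requests this prints depends entirely on the simulated setup and says nothing about the 19 interventions reported for the real system. Asking for help only when the best candidate scores below the threshold is what keeps the person's workload low: once the model holds a few good examples, most trials proceed without a request.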

Images by Joseph DelPreto, MIT CSAIL

Experiments and Results

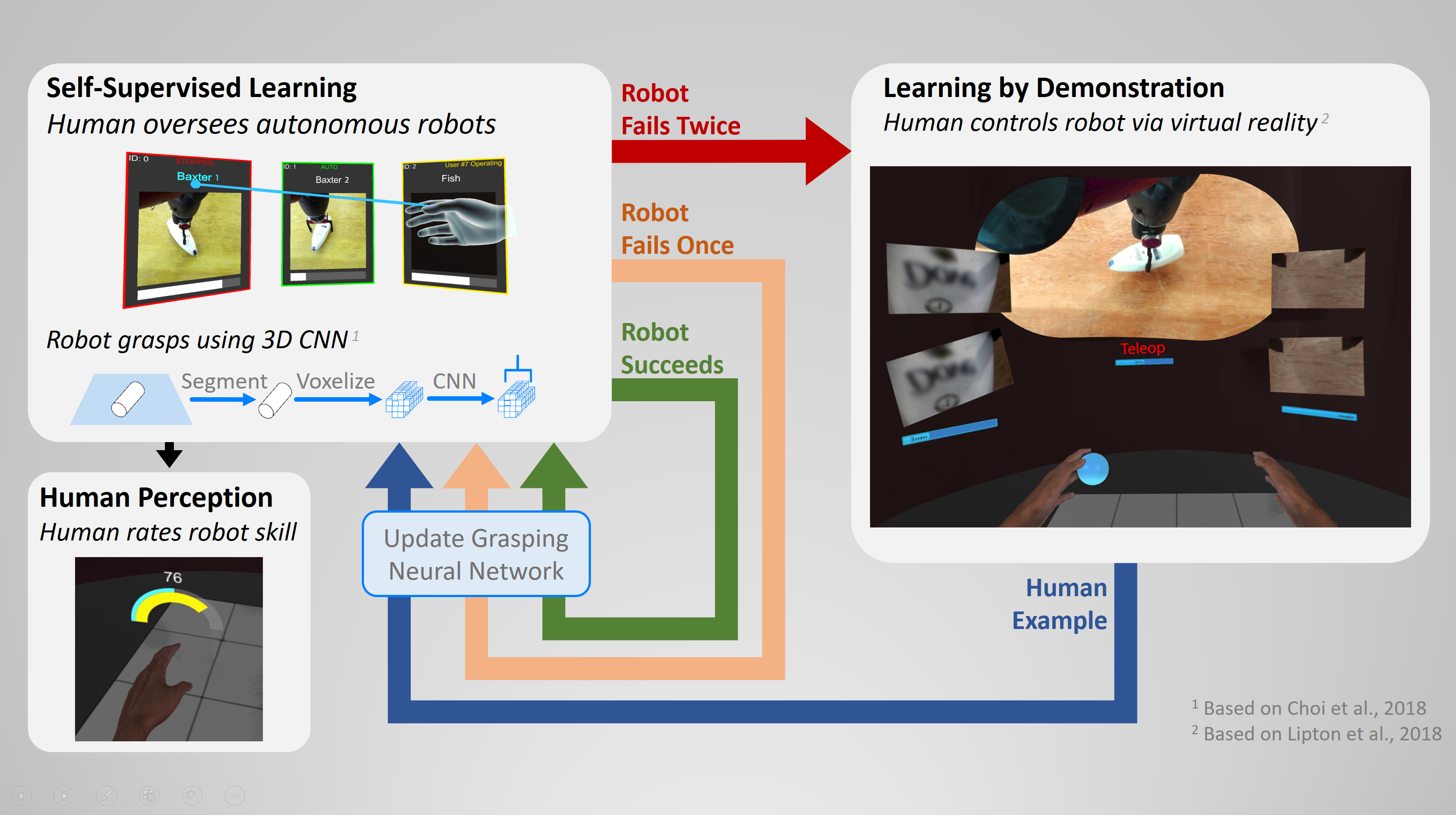

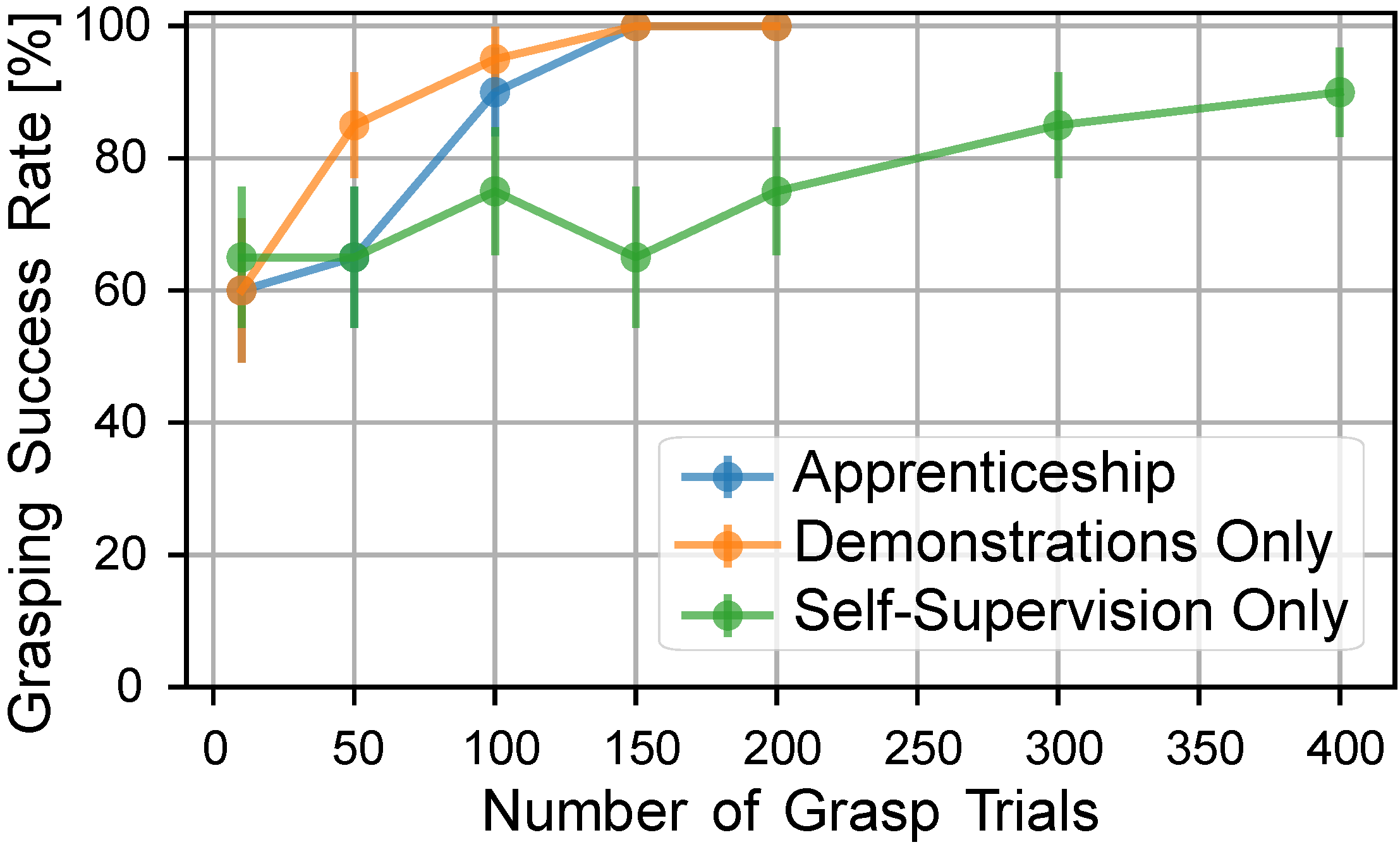

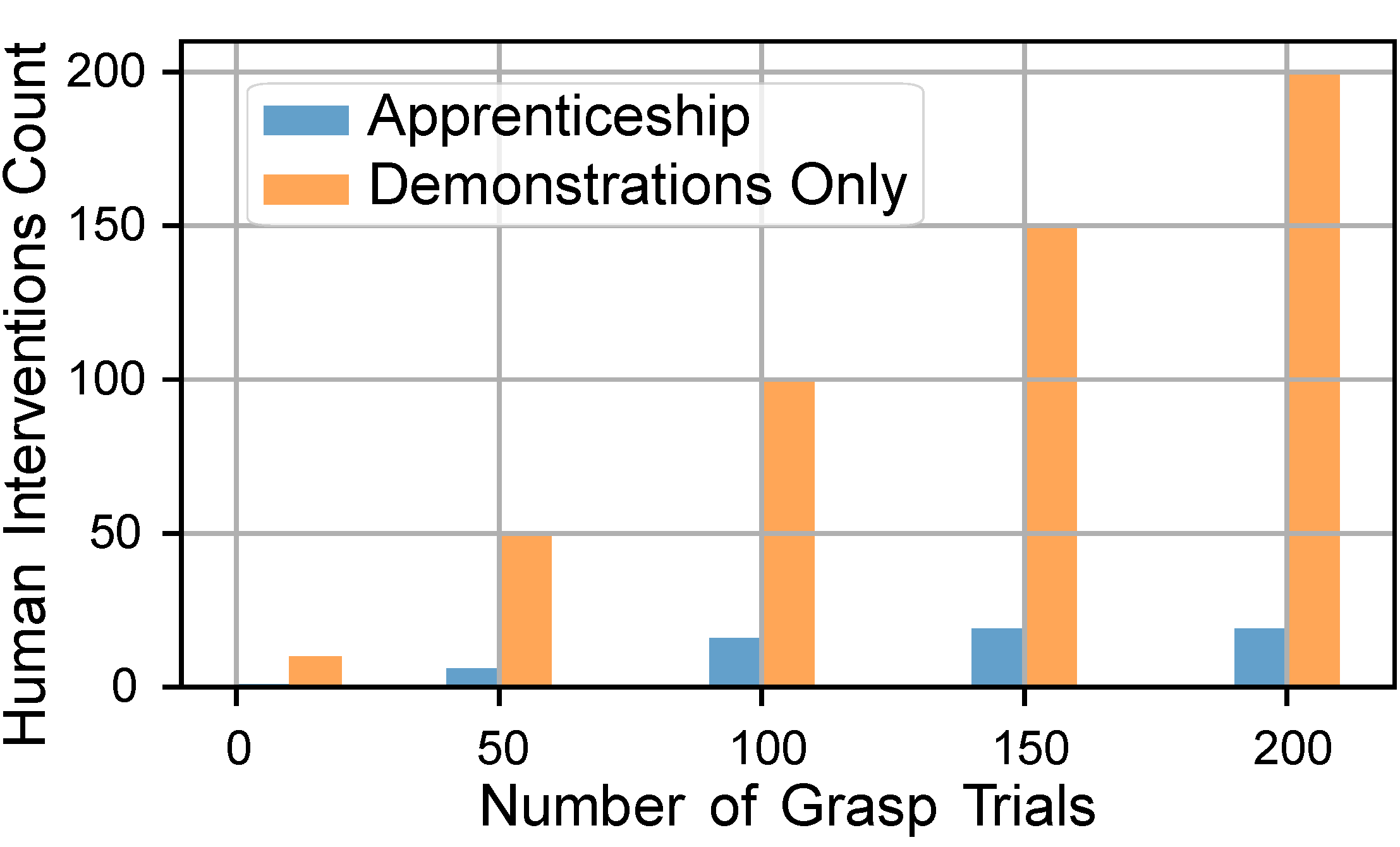

Experiments compared the apprenticeship model to learning by self-supervision (the robot learns completely on its own) and to learning completely by demonstration (the person controls the robot for every example). Results indicate that the robot learned faster when demonstrations were included: it learned a model with 100% grasping success after 150 grasps. Learning purely by demonstration was the fastest, but it required a lot of human involvement, since the person provided all 150 grasp examples. The apprenticeship model, however, required only 19 human interventions to reach the same accuracy. Overall, the apprenticeship model combined the learning benefits of demonstrations with the reduced human workload of self-supervision.
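The workload comparison above reduces to simple arithmetic. This sketch assumes that the 19 demonstrations are counted among the 150 grasp examples, which the results imply but do not state outright; the constant names and the `human_share` helper are hypothetical.

```python
# Figures reported in the results; the percentages are derived arithmetic.
TOTAL_GRASP_EXAMPLES = 150
DEMOS_PURE_DEMONSTRATION = 150  # the person provided every example
DEMOS_APPRENTICESHIP = 19       # human interventions requested by the robot


def human_share(demonstrations: int, total_examples: int) -> float:
    """Fraction of grasp examples that came from the human."""
    return demonstrations / total_examples


apprentice_share = human_share(DEMOS_APPRENTICESHIP, TOTAL_GRASP_EXAMPLES)
workload_reduction = 1 - DEMOS_APPRENTICESHIP / DEMOS_PURE_DEMONSTRATION

print(f"Human share of examples: {apprentice_share:.1%}")  # → 12.7%
print(f"Fewer demonstrations than pure demonstration: {workload_reduction:.1%}")  # → 87.3%
```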

The apprenticeship model achieves higher grasping success than pure self-supervision with fewer examples, and it matches the perfect success rate of pure learning by demonstration while requiring fewer human interventions. It reaches 100% grasping success after 150 trials and 19 human demonstrations.

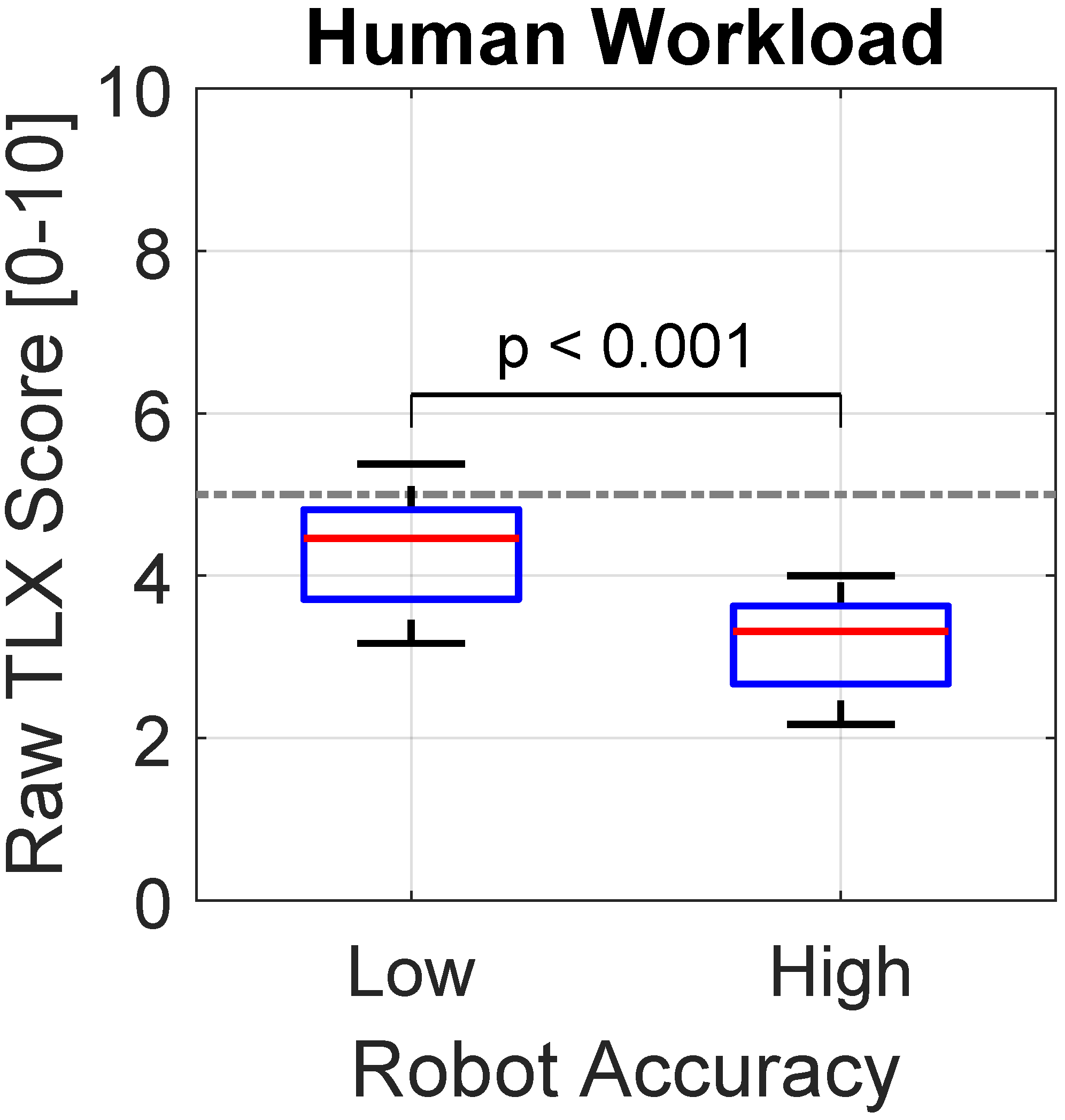

User studies suggest that people perceived the workload as reasonable, and that they perceived a decreased workload once the robot learned and requested fewer demonstrations. They also rated the system as relatively easy to learn and natural to use. Together, these results are promising for scaling the framework to a single user supervising multiple robots in the future.

Users perceived a reasonable workload, and they noticed it decrease when the robot learned a more accurate grasping model.

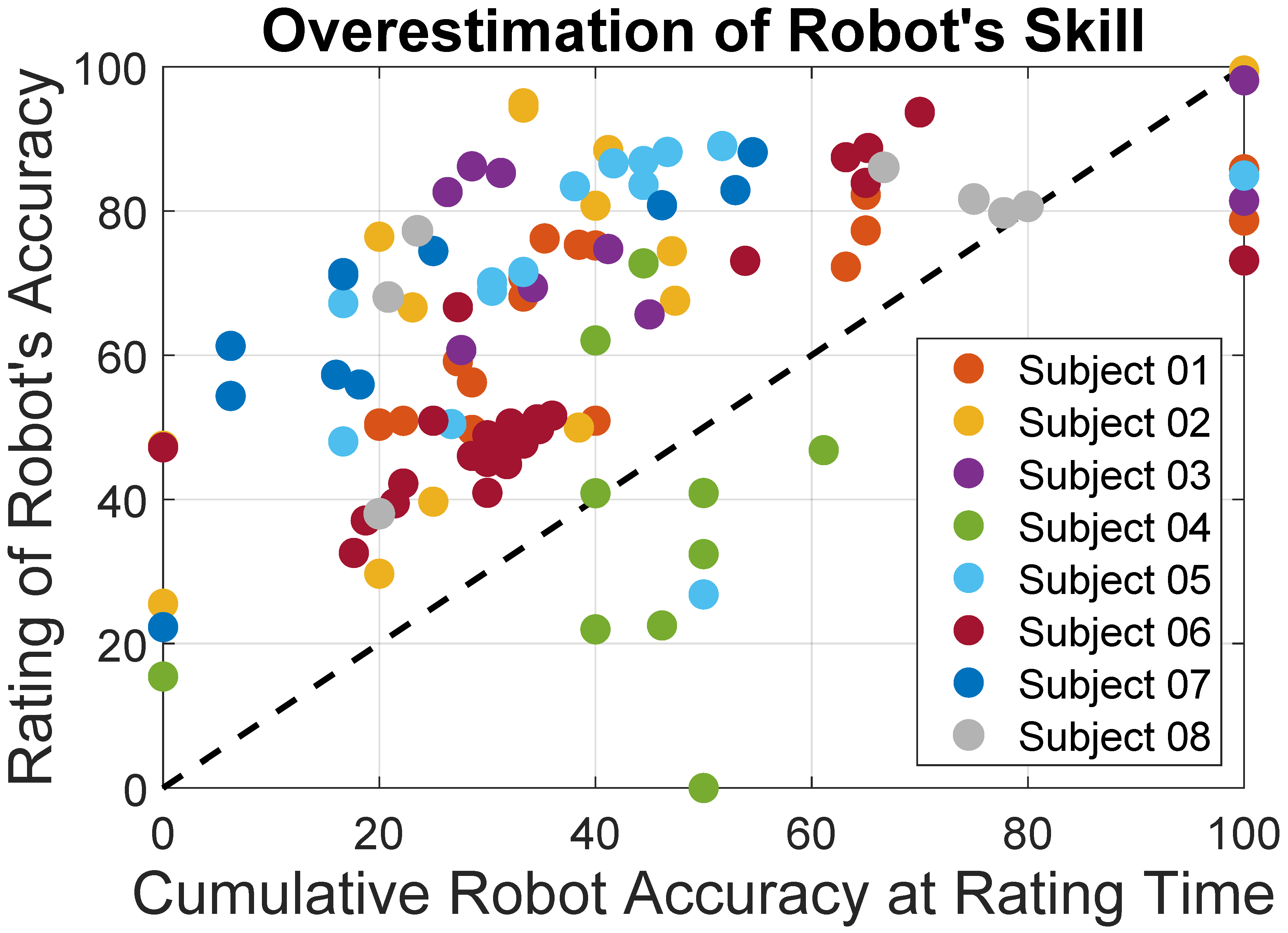

While the robot learned to grasp, the users also rated how good they thought the robot was at the grasping task. Comparing these ratings to the robot's actual accuracy indicates that people tended to overestimate the robot's skill. Further analysis also suggests that they may overestimate changes in the robot's skill: they may think that the robot improved or declined more than it actually did. These trends would need to be explored with more subjects in the future, but they suggest interesting considerations for promoting successful human-robot team dynamics.

Users tended to overestimate the robot's skill. Preliminary results also suggest that they may tend to overestimate changes in the robot's skill, and to generalize its abilities to new situations.

Project Members

Joseph DelPreto, Jeffrey I. Lipton, Lindsay Sanneman, Aidan J. Fay, Christopher Fourie, Changhyun Choi, and Daniela Rus

Last updated Aug 12 '20