Project

Controlling drones and other robots with gestures

From spaceships to Roombas, robots have the potential to be valuable assistants and to extend our capabilities. But it can still be hard to tell them what to do - we'd like to interact with a robot as if we were interacting with another person, but it's often clumsy to use pre-specified voice/touchscreen commands or to set up elaborate sensors. Allowing robots to understand our nonverbal cues such as gestures with minimal setup or calibration can be an important step towards more pervasive human-robot collaboration.

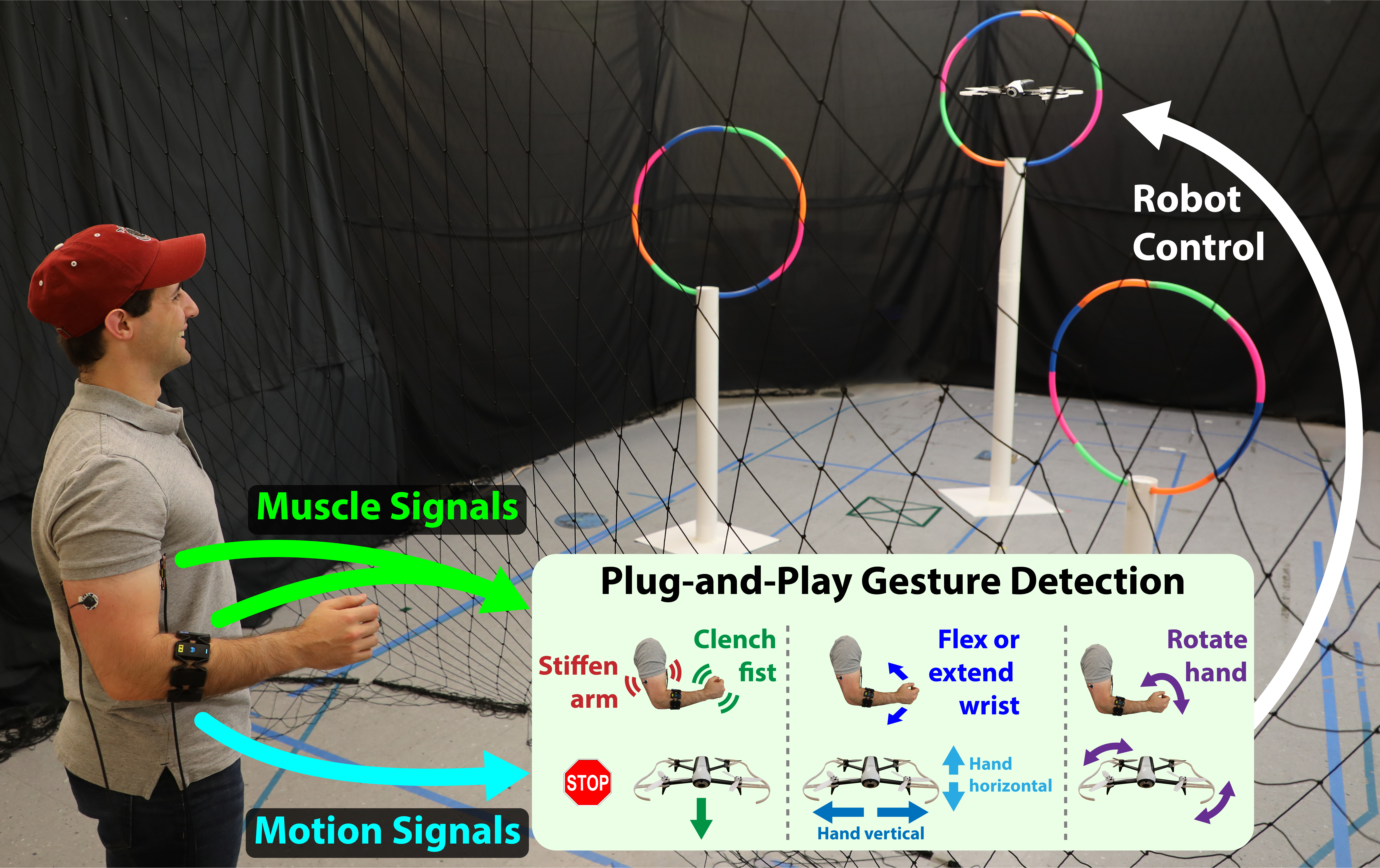

This system, dubbed Conduct-a-Bot, aims to take a step towards these goals by detecting gestures from wearable muscle and motion sensors. A user can make gestures to remotely control a robot by wearing small sensors on their biceps, triceps, and forearm. The current system detects 8 predefined navigational gestures without requiring offline calibration or training data - a new user can simply put on the sensors and start gesturing to remotely pilot a drone.

By using a small number of wearable sensors and plug-and-play algorithms, the system aims to lower the barrier for casual users to interact with robots. It builds an expandable vocabulary for communicating with a robot assistant or other electronic devices in a more natural way. We look forward to extending this vocabulary to additional scenarios and to evaluating it with more users and robots.

A gesture vocabulary enables remote robot control using muscle and motion sensors

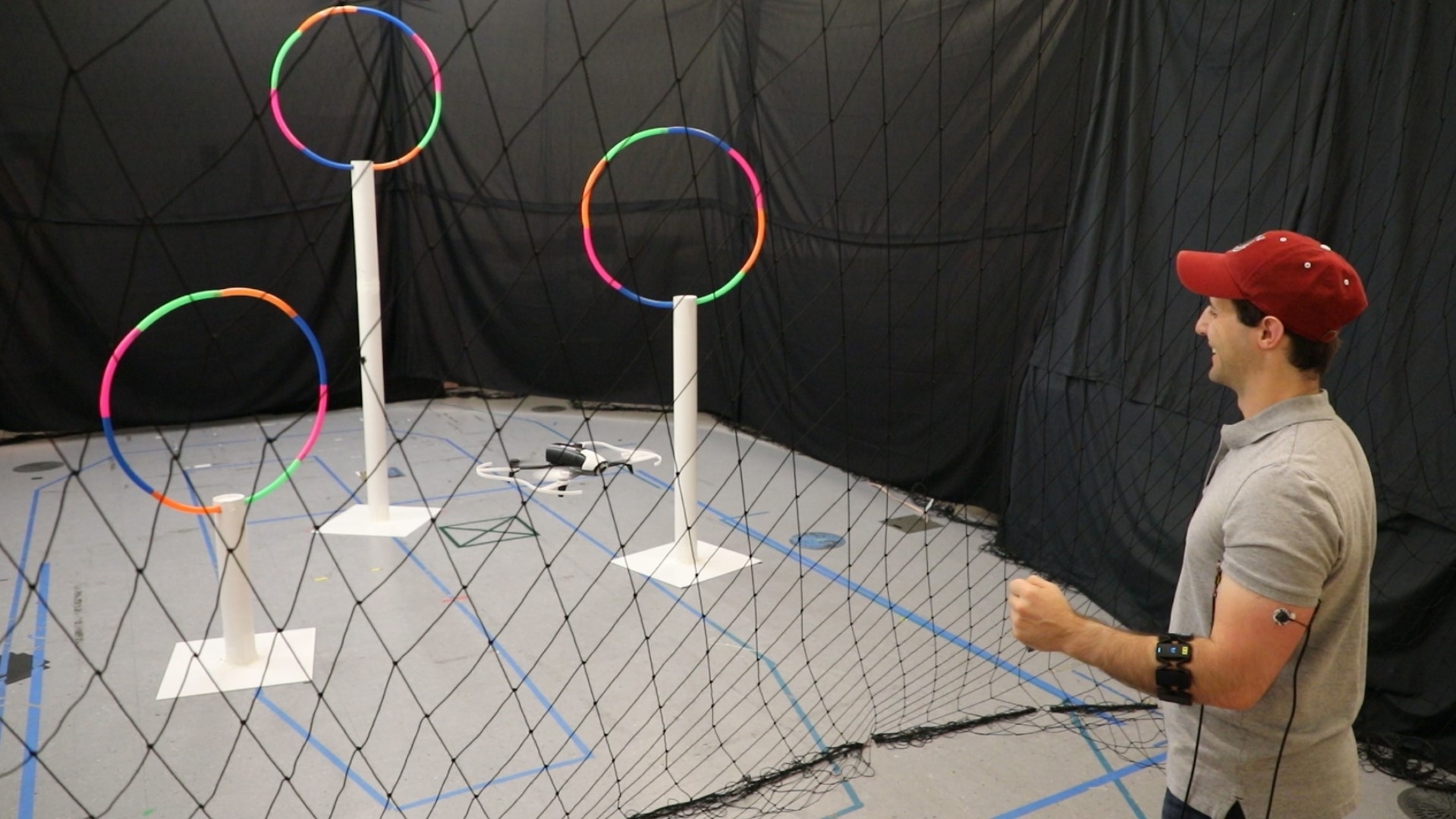

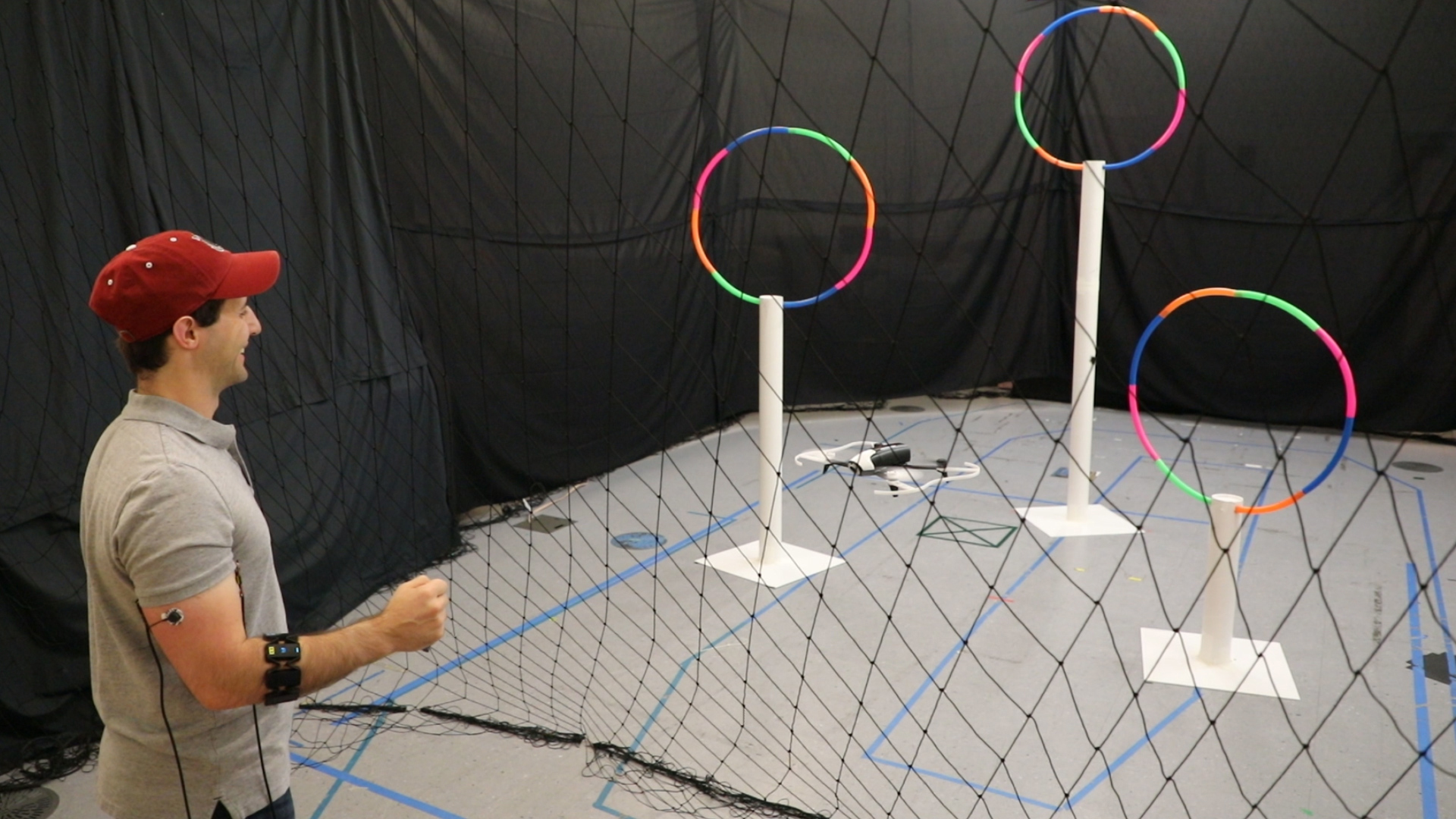

Pilot a drone through hoops using gestures and wearable sensors

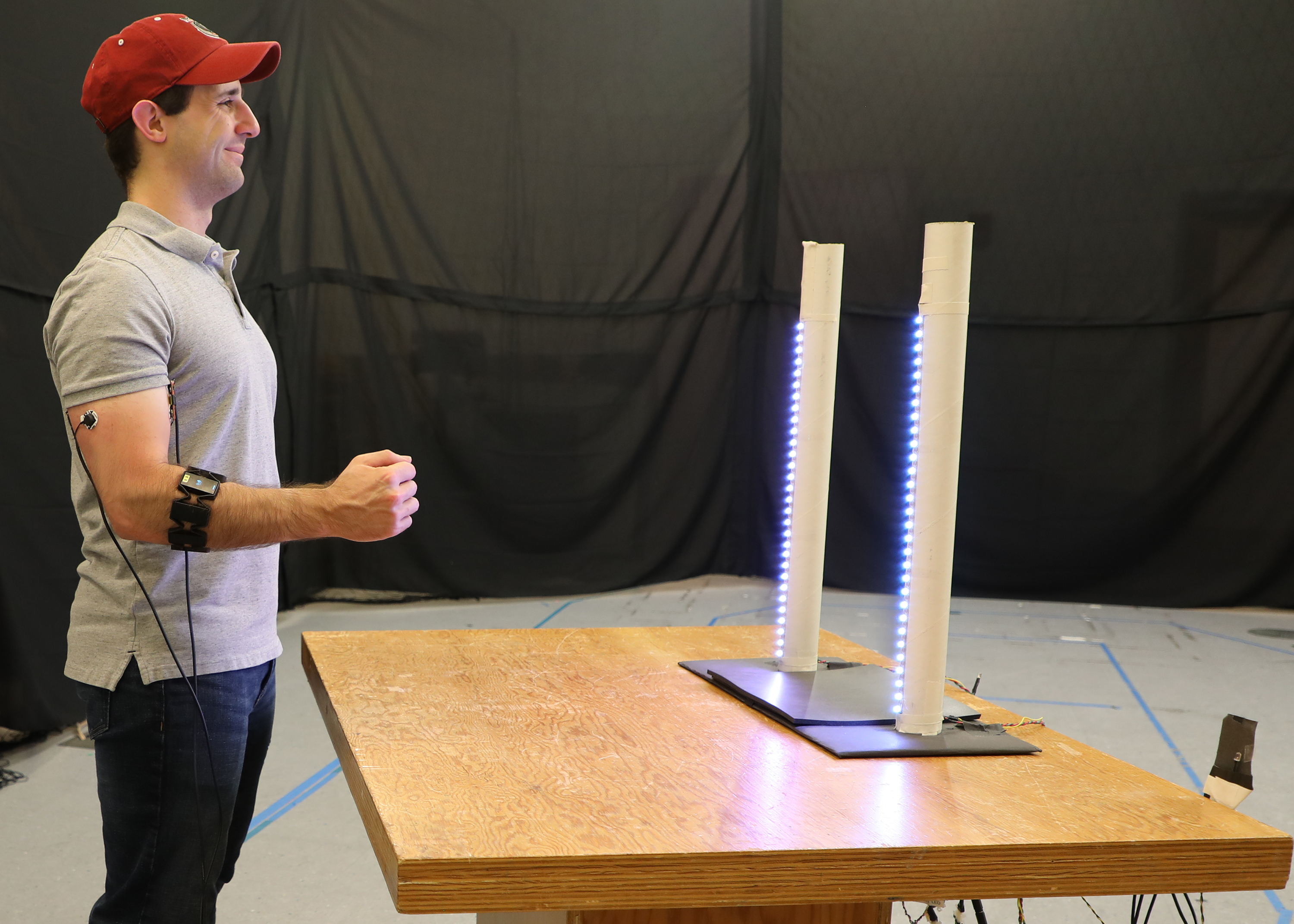

Experiments included sessions that cued gestures with LEDs

Current experiments used a Parrot Bebop 2 drone

Conference Media: Human-Robot Interaction 2020 (HRI '20)

Virtual Presentation

Demo Video

Sensors: Wearable EMG and IMU

Gestures are detected using wearable muscle and motion sensors. Muscle sensors, called electromyography (EMG) sensors, are worn on the biceps and triceps to detect when the upper arm muscles are tensed. A wireless device with EMG and motion sensors is also worn on the forearm.

In the current experiments, MyoWare processing boards with Covidien electrodes and an NI data acquisition device were used to stream biceps and triceps activity. The Myo Gesture Control Armband was used to monitor forearm activity. Alternative sensors and acquisition devices could be substituted in the future.

EMG sensors monitor biceps, triceps, and forearm muscles.

The forearm device includes EMG electrodes, an accelerometer, and a gyroscope.

Gesture Detection: Classification Pipelines

Machine learning pipelines process the muscle and motion signals to classify 8 possible gestures at any time. For most of the gestures, unsupervised classifiers process the muscle and motion data to learn how to separate gestures from other motions in real time; Gaussian Mixture Models (GMMs) are continuously updated to cluster the streaming data and create adaptive thresholds. This lets the system calibrate itself to each person's signals while they're making gestures that control the robot. Since it doesn't need any calibration data ahead of time, this can help users start interacting with the robot quickly.
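The idea of a continuously updated mixture model acting as an adaptive threshold can be illustrated with a minimal sketch. The page does not specify the features, the number of mixture components, or how the threshold is derived, so everything below is an assumption made for illustration: a single 1-D muscle-activity envelope, a two-component mixture refit with EM over a rolling window, and a threshold placed midway between the two component means. The names `fit_gmm_1d` and `AdaptiveThreshold` are hypothetical, and the input is synthetic rather than recorded EMG.

```python
import math
import random
from collections import deque


def fit_gmm_1d(values, n_iter=30):
    """Fit a two-component 1-D Gaussian mixture with EM.

    Returns (weights, means, variances). Illustrative only.
    """
    lo, hi = min(values), max(values)
    means = [lo + 0.25 * (hi - lo), lo + 0.75 * (hi - lo)]
    var0 = max((hi - lo) ** 2 / 16.0, 1e-6)
    variances = [var0, var0]
    weights = [0.5, 0.5]
    for _ in range(n_iter):
        # E-step: responsibility of each component for each sample.
        resp = []
        for x in values:
            p = [
                w * math.exp(-0.5 * (x - m) ** 2 / v) / math.sqrt(2 * math.pi * v)
                for w, m, v in zip(weights, means, variances)
            ]
            total = sum(p) or 1e-300  # guard against underflow to zero
            resp.append([pk / total for pk in p])
        # M-step: re-estimate weight, mean, and variance of each component.
        for k in range(2):
            nk = sum(r[k] for r in resp) + 1e-12
            weights[k] = nk / len(values)
            means[k] = sum(r[k] * x for r, x in zip(resp, values)) / nk
            spread = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, values))
            variances[k] = max(spread / nk, 1e-6)
    return weights, means, variances


class AdaptiveThreshold:
    """Rolling-window detector whose threshold follows the streaming data."""

    def __init__(self, window=400, min_samples=50, refit_every=25):
        self.buffer = deque(maxlen=window)  # assumed window length
        self.min_samples = min_samples
        self.refit_every = refit_every
        self.threshold = None
        self._since_fit = 0

    def update(self, value):
        """Add one sample; return True if it exceeds the current threshold."""
        self.buffer.append(value)
        self._since_fit += 1
        if len(self.buffer) >= self.min_samples and self._since_fit >= self.refit_every:
            _, means, _ = fit_gmm_1d(list(self.buffer))
            # Assumed rule: midpoint between the "relaxed" and "tensed" clusters.
            self.threshold = 0.5 * (means[0] + means[1])
            self._since_fit = 0
        return self.threshold is not None and value > self.threshold


# Synthetic stream: alternating blocks of relaxed and tensed muscle activity.
random.seed(0)
detector = AdaptiveThreshold()
for block in range(8):
    tensed = block % 2 == 1
    for _ in range(50):
        sample = random.gauss(0.8, 0.1) if tensed else random.gauss(0.1, 0.02)
        detector.update(sample)
print("learned threshold:", round(detector.threshold, 3))
```

Because the mixture is refit from recent samples only, the threshold follows each wearer's signal levels without a separate calibration session. One limitation of this simplified version is that the threshold is not meaningful until the window has seen both relaxed and tensed activity.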

In parallel with these classification pipelines, a neural network predicts wrist flexion or extension from forearm muscle signals. The network is trained on data from previous users instead of requiring new training data from each user.
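Training on previous users and then applying the network unchanged to a new user can be sketched as follows. The page does not describe the network architecture, input features, or output classes, so this example assumes all of them: eight per-channel activity features, a per-window normalization to reduce differences in signal strength between users, a small one-hidden-layer network, and three classes (neither, flexion, extension). All data are synthetic, and every function name is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_CHANNELS = 8  # assumed number of forearm EMG features per window


def synth_user(gain, n_per_class=100):
    """Synthetic per-channel activity for one user; `gain` mimics signal strength."""
    patterns = np.array([
        [1, 1, 1, 1, 1, 1, 1, 1],  # class 0: neither gesture
        [6, 6, 6, 6, 1, 1, 1, 1],  # class 1: wrist flexion (assumed pattern)
        [1, 1, 1, 1, 6, 6, 6, 6],  # class 2: wrist extension (assumed pattern)
    ], dtype=float)
    X, y = [], []
    for label, pattern in enumerate(patterns):
        noise = rng.normal(0.0, 0.2, size=(n_per_class, N_CHANNELS))
        X.append(gain * np.abs(pattern + noise))
        y.append(np.full(n_per_class, label))
    return np.vstack(X), np.concatenate(y)


def normalize(X):
    """Divide each window by its mean activity and center it at zero."""
    return X / X.mean(axis=1, keepdims=True) - 1.0


def train_mlp(X, y, hidden=16, epochs=500, lr=0.5):
    """Train a one-hidden-layer softmax classifier with full-batch gradient descent."""
    n, d = X.shape
    k = int(y.max()) + 1
    W1 = rng.normal(0.0, 0.5, size=(d, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, size=(hidden, k))
    b2 = np.zeros(k)
    Y = np.eye(k)[y]  # one-hot targets
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)
        logits = H @ W2 + b2
        logits -= logits.max(axis=1, keepdims=True)  # numerical stability
        P = np.exp(logits)
        P /= P.sum(axis=1, keepdims=True)
        G = (P - Y) / n  # gradient of mean cross-entropy w.r.t. logits
        dH = (G @ W2.T) * (1.0 - H ** 2)
        W2 -= lr * (H.T @ G)
        b2 -= lr * G.sum(axis=0)
        W1 -= lr * (X.T @ dH)
        b1 -= lr * dH.sum(axis=0)
    return W1, b1, W2, b2


def predict(params, X):
    W1, b1, W2, b2 = params
    return np.argmax(np.tanh(X @ W1 + b1) @ W2 + b2, axis=1)


# Train on pooled data from "previous users" with different signal strengths.
prior = [synth_user(g) for g in (0.5, 1.0, 2.0)]
X_train = normalize(np.vstack([X for X, _ in prior]))
y_train = np.concatenate([y for _, y in prior])
params = train_mlp(X_train, y_train)

# Apply the trained network to an unseen user with no retraining.
X_new, y_new = synth_user(gain=1.4)
accuracy = float(np.mean(predict(params, normalize(X_new)) == y_new))
print(f"accuracy on unseen synthetic user: {accuracy:.2f}")
```

The synthetic users here differ only in overall signal strength, which the normalization removes entirely. Real users also differ in electrode placement and muscle activation patterns, so performance on this toy data says nothing about the published system.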

Recorded muscle and motion signals

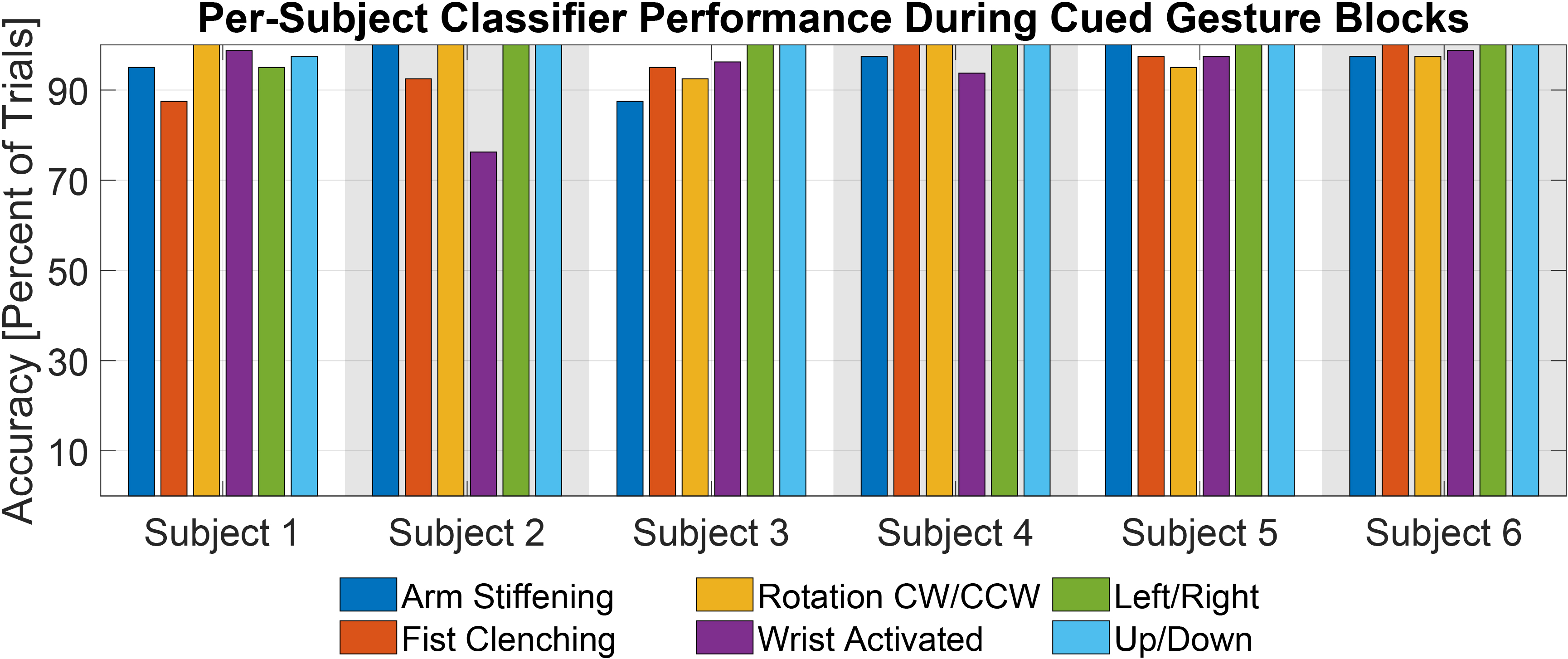

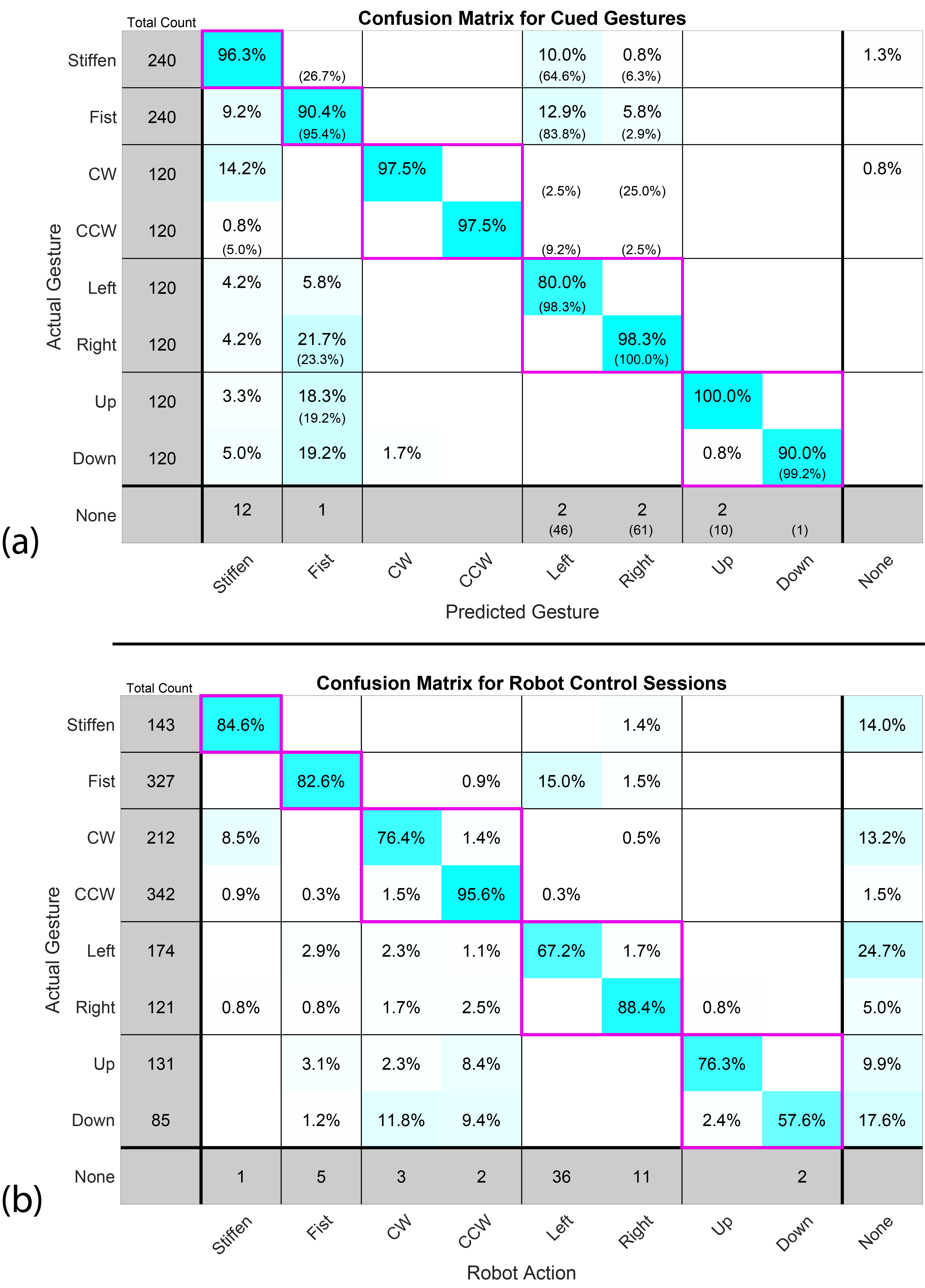

Classification performance for cued gestures

Classification performance for cued gestures and during unstructured robot control



Last updated Aug 12 '20