PI

Core/Dual

Saman Amarasinghe

During the last half century, Moore’s Law fueled the information revolution. By doubling the available compute power roughly every two years, it gave programmers ample resources to run existing algorithms on ever more demanding workloads, to invent new algorithms, and to improve their own productivity so that new capabilities could be developed rapidly. The extraordinary momentum and unprecedented impact of Moore’s Law made it seem as if it would last forever, and optimizing the use of resources, a cornerstone of every other engineering discipline, was the last thing on programmers’ minds.

Now, though, Moore’s Law is coming to an end. Because we relied on Moore’s Law instead of optimizing for performance, most software today is heavily bloated and inefficient. To keep performance improving at the pace Moore’s Law once provided, and thereby keep driving innovation, we must focus on performance engineering when writing applications.

Professor Saman P. Amarasinghe’s research takes just this approach. Prof. Amarasinghe leads the Commit compiler group in MIT CSAIL, which focuses on programming languages and compilers that maximize performance on modern computing platforms. He is a world leader in high-performance, domain-specific languages for targeted application domains such as image processing, stream computations, and graph analytics. He also pioneered the use of machine learning for compiler optimization. In addition, he brings his expertise to industry: he founded Determina, Inc., which was acquired by VMware in 2007, and co-founded Lanka Internet Services, Ltd., the first internet service provider in Sri Lanka. He is the faculty director of MIT’s Global Startup Labs, whose summer programs in 17 countries have helped create more than 20 thriving startups. He joined the MIT faculty in 1997 and was elected an ACM Fellow in 2019.

Prof. Amarasinghe looks for novel ways to improve the performance of computer systems through programming languages and compilers. Programming languages tell computers precisely what to do, and compilers translate those high-level program descriptions into the simple machine language that computers actually execute. One focus of his research is designing new programming languages for particular computation domains (from image processing to quantum chromodynamics) and maximizing performance within those domains. Domain-specific, high-performance compilers let researchers in many fields get the performance they need while concentrating on their experiments, instead of spending most of their time writing and optimizing code.

Today’s tools are opening further doors to programmer productivity and high-performance compilers. Machine learning models, for example, can be trained on massive amounts of programming data, millions of lines of code, so that a compiler learns to optimize and generate code and keeps up with changes in hardware architecture faster and more responsively than a compiler built mostly on human-written heuristics.

Projects led by Prof. Amarasinghe include the domain-specific languages Halide and Simit. Halide targets image processing and addresses the challenge of getting high performance out of image processing pipelines, which compose multiple stencil computations, complex reductions, and global or data-dependent access patterns as stages connected in a complex stream program. Halide is becoming the industry-standard language for image processing and has been widely adopted by Google, Adobe, Facebook, and Qualcomm. Simit is a language that makes it easy to compute on sparse systems using linear algebra. Simit programs are often simpler and shorter than equivalent MATLAB programs, yet perform comparably to hand-optimized code.
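To make "a pipeline of stencil stages" concrete, here is a minimal sketch in plain Python. It is not Halide's API (Halide is a language embedded in C++); the function names are hypothetical. It builds a two-stage blur in which a horizontal 3-tap stencil feeds a vertical one, and then evaluates the same pipeline in two different orders: materializing the intermediate stage in a buffer versus recomputing it inline for each output pixel. Halide separates the algorithm from such scheduling choices so that the order can be changed without rewriting the algorithm; the sketch only illustrates that idea by hand.

```python
from typing import List

Image = List[List[float]]  # row-major grayscale image: img[y][x]


def blur_x(img: Image) -> Image:
    """Stage 1: horizontal 3-tap average. Drops one column at each edge."""
    h, w = len(img), len(img[0])
    return [[(img[y][x - 1] + img[y][x] + img[y][x + 1]) / 3.0
             for x in range(1, w - 1)]
            for y in range(h)]


def blur_y(tmp: Image) -> Image:
    """Stage 2: vertical 3-tap average over stage 1. Drops one row at each edge."""
    h, w = len(tmp), len(tmp[0])
    return [[(tmp[y - 1][x] + tmp[y][x] + tmp[y + 1][x]) / 3.0
             for x in range(w)]
            for y in range(1, h - 1)]


def blur_materialized(img: Image) -> Image:
    # Order A: compute all of stage 1 into a full intermediate buffer,
    # then run stage 2 over it (extra memory traffic, no recomputation).
    return blur_y(blur_x(img))


def blur_fused(img: Image) -> Image:
    # Order B: no intermediate buffer; each output pixel recomputes the
    # three stage-1 values it needs (better locality, redundant arithmetic).
    h, w = len(img), len(img[0])

    def bx(y: int, x: int) -> float:
        return (img[y][x - 1] + img[y][x] + img[y][x + 1]) / 3.0

    return [[(bx(y - 1, x) + bx(y, x) + bx(y + 1, x)) / 3.0
             for x in range(1, w - 1)]
            for y in range(1, h - 1)]
```

Both functions return the same (h-2) x (w-2) image; they differ only in how much memory they touch and how much work they repeat, which is exactly the trade-off a schedule controls.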

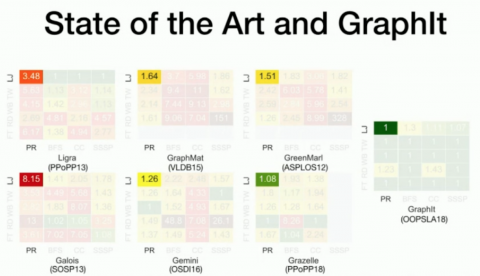

Prof. Amarasinghe is also working with industry on projects such as the Tensor Algebra Compiler (Taco), which compiles dense and sparse linear and tensor algebra expressions, and is investigating the use of synthetic data to improve data privacy and security for organizations.
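For a sense of what a sparse tensor algebra compiler produces, the sketch below hand-writes the loop nest for the sparse matrix-vector product y(i) = A(i,j) * x(j), with A stored in compressed sparse row (CSR) format. This is illustrative plain Python, not code emitted by Taco, and the helper names `dense_to_csr` and `spmv_csr` are hypothetical. The key property is that the inner loop visits only the nonzeros of A; generating loops like this automatically for a given expression and storage format is the kind of work Taco does.

```python
from typing import List, Sequence, Tuple

CSR = Tuple[List[int], List[int], List[float]]  # (row_ptr, col_idx, vals)


def dense_to_csr(dense: Sequence[Sequence[float]]) -> CSR:
    """Pack a dense matrix into CSR: nonzeros of row i live in vals[row_ptr[i]:row_ptr[i+1]]."""
    row_ptr, col_idx, vals = [0], [], []
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:
                col_idx.append(j)
                vals.append(v)
        row_ptr.append(len(vals))
    return row_ptr, col_idx, vals


def spmv_csr(row_ptr: List[int], col_idx: List[int],
             vals: List[float], x: Sequence[float]) -> List[float]:
    """Compute y(i) = sum_j A(i,j) * x(j), touching only stored nonzeros."""
    y = [0.0] * (len(row_ptr) - 1)
    for i in range(len(row_ptr) - 1):
        acc = 0.0
        for p in range(row_ptr[i], row_ptr[i + 1]):
            acc += vals[p] * x[col_idx[p]]
        y[i] = acc
    return y


A = [[1, 0, 2], [0, 0, 3], [4, 5, 0]]
print(spmv_csr(*dense_to_csr(A), [1.0, 2.0, 3.0]))  # → [7.0, 9.0, 14.0]
```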

The end of Moore’s Law may be near, but by rethinking programming around domain-specific languages with an eye on performance, and by applying new tools and novel approaches, we can continue to fuel the trajectory of innovation we have enjoyed for over half a century.

BIO

Saman P. Amarasinghe is a Professor in the Department of Electrical Engineering and Computer Science. He leads the Commit compiler group. His research interests are in discovering novel approaches to improve the performance of modern computer systems and make them more secure without unduly increasing the complexity faced by end users, application developers, compiler writers, or computer architects. Saman received his BS in Electrical Engineering and Computer Science from Cornell University in 1988, and his MSEE and Ph.D. from Stanford University in 1990 and 1997, respectively.


Last updated Dec 17 '20