When you search sites like Amazon, they present results in the format they want: sponsored results up top and, as some sleuths have discovered, sometimes their own products ahead of others.

But what if you, the user, had more control over how you could look at information on sites like Amazon, eBay or Etsy?

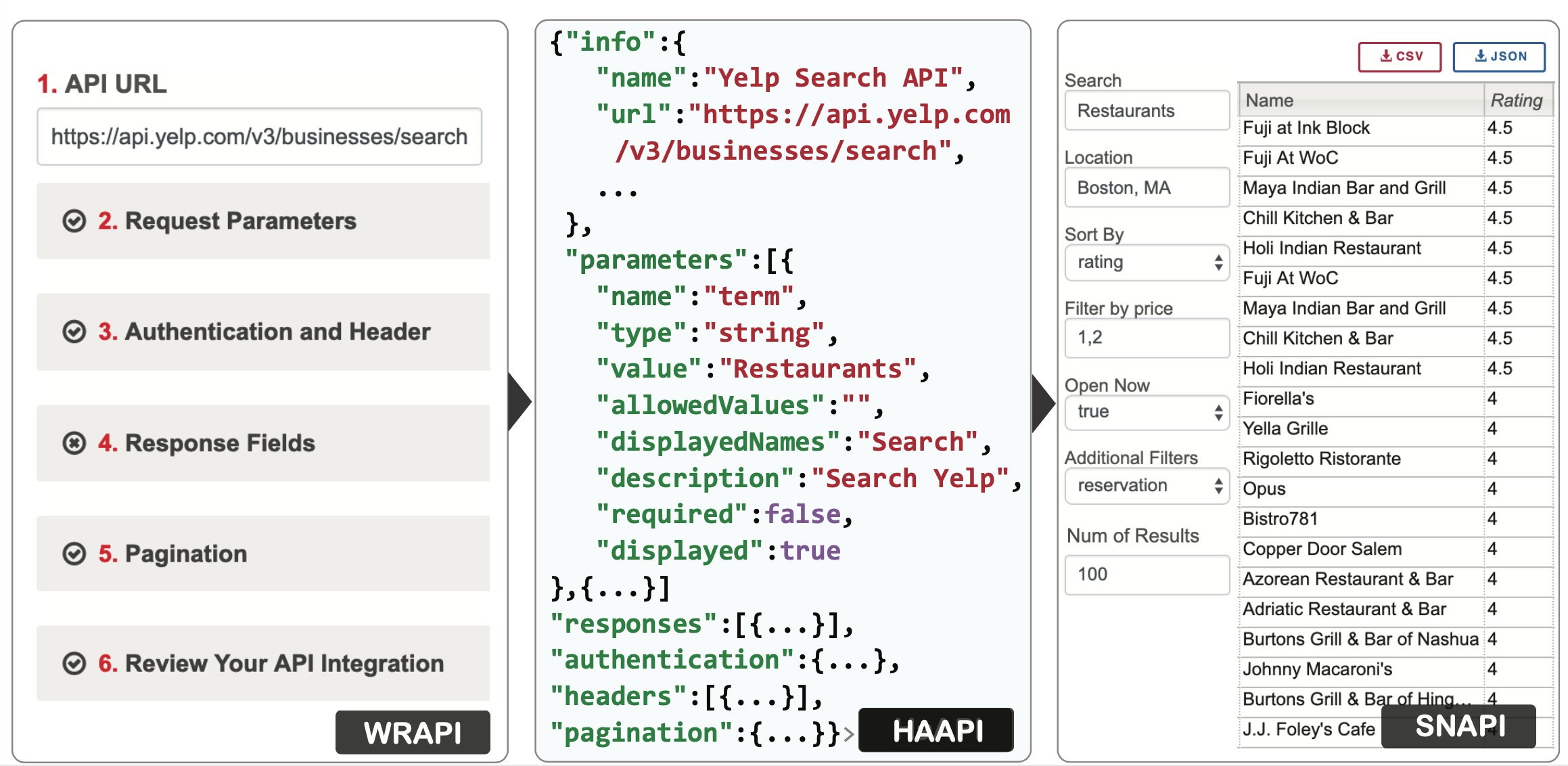

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) have created a new tool that makes it easier to access web data through companies’ “application programming interfaces” (APIs). Dubbed ScrAPIr, the tool lets non-programmers access, query, save and share web data APIs, so that they can glean meaningful information from them and organize the data the way they want.

For example, the team showed that they can organize clothing on Etsy by the year or decade it was produced - a step beyond the website’s own binary option of “new” or “vintage.” (Amazon, alas, recently restricted API access to vendors, so you’re out of luck if you want to use ScrAPIr to, say, search breakfast cereal by unit price.)
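The decade view described above amounts to regrouping the records an API returns by a field the website's own interface does not let you sort on. A minimal Python sketch, assuming each record carries a numeric production year under a hypothetical `year_made` key (Etsy's actual field names and formats are not described here), with made-up sample records:

```python
from collections import defaultdict


def decade_of(year: int) -> str:
    """Map a four-digit year to a decade label, e.g. 1972 -> "1970s"."""
    return f"{year // 10 * 10}s"


def group_by_decade(items: list[dict], year_key: str = "year_made") -> dict[str, list[dict]]:
    """Bucket API records by decade; `year_key` is a hypothetical field name."""
    groups: dict[str, list[dict]] = defaultdict(list)
    for item in items:
        year = item.get(year_key)
        # Skip records without a usable integer year instead of guessing one.
        if isinstance(year, int):
            groups[decade_of(year)].append(item)
    # Sorting the labels puts decades in chronological order for four-digit years.
    return dict(sorted(groups.items()))


# Hypothetical records, standing in for parsed API results.
listings = [
    {"title": "wool coat", "year_made": 1972},
    {"title": "denim jacket", "year_made": 1985},
    {"title": "silk scarf", "year_made": 1979},
    {"title": "knit sweater"},  # no year: left out of every bucket
]

for decade, group in group_by_decade(listings).items():
    print(decade, [item["title"] for item in group])
# prints:
# 1970s ['wool coat', 'silk scarf']
# 1980s ['denim jacket']
```

The point of the sketch is that once the raw records are available, a finer-grained view is a few lines of filtering and grouping rather than something the site has to offer.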

“APIs deliver more information than what website designers choose to expose, but this imposes limits on users who may want to look at the data in a different way,” says MIT professor David Karger, senior author of a new paper about ScrAPIr. “With this tool, *all* of the data exposed by the API is available for viewing, filtering, and sorting.”

The team also assembled a repository of more than 50 COVID-19-related data sources, which it is encouraging journalists and data scientists to comb for trends and insights.

“An epidemiologist might know a lot about the statistics of infection but not know enough programming to write code to fetch the data they want to analyze,” says Karger. “Their expertise is effectively being blocked from being put to use.”

Accessing APIs has traditionally required strong coding skills, which has largely reserved such data-wrangling for organizations that can afford to hire programmers.

The only alternatives for non-coders are to laboriously copy and paste data or to use web scrapers that download a site’s webpages and parse the content for the desired data. Such scrapers become increasingly error-prone as websites grow more complex and dynamic.

“How is it fair that only people with coding skills have the advantage of accessing all this public data?” says graduate student Tarfah Alrashed, lead author of the new paper. “One of the motivations of this project is our belief that information on the web should be available for anyone, from small-town reporters investigating city spending to third-world NGOs monitoring COVID-19 cases.”

To integrate a new API into ScrAPIr, all a non-programmer has to do is fill out a form telling ScrAPIr about certain aspects of the API.
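One way to picture this form-based step is as capturing a few facts about an API as data and then turning those facts into requests. The sketch below is hypothetical: the field names (`base_url`, `page_param`, `results_field`) and the page-numbered pagination scheme are assumptions for illustration, not ScrAPIr's actual form, and it only builds URLs rather than sending any network requests.

```python
from dataclasses import dataclass, field
from urllib.parse import urlencode


@dataclass
class ApiDescription:
    """Hypothetical record of what a user might enter in an API-description form."""
    base_url: str                                         # endpoint to query
    params: dict[str, str] = field(default_factory=dict)  # fixed query parameters
    page_param: str = "page"                              # assumed name of the page-number parameter
    results_field: str = "results"                        # assumed key holding the items in the JSON response


def build_request_urls(desc: ApiDescription, pages: int) -> list[str]:
    """Expand the description into one GET URL per page, starting at page 1."""
    urls = []
    for page in range(1, pages + 1):
        query = {**desc.params, desc.page_param: page}
        urls.append(f"{desc.base_url}?{urlencode(query)}")
    return urls


def extract_items(desc: ApiDescription, response_json: dict) -> list:
    """Pull the list of items out of one decoded JSON response."""
    return response_json.get(desc.results_field, [])


# Hypothetical endpoint; example.com is reserved for documentation.
desc = ApiDescription("https://api.example.com/listings", {"keywords": "vintage dress"})
for url in build_request_urls(desc, pages=2):
    print(url)
# prints:
# https://api.example.com/listings?keywords=vintage+dress&page=1
# https://api.example.com/listings?keywords=vintage+dress&page=2
```

Keeping the description as plain data rather than code is what allows a form, instead of a program, to drive the requests; how ScrAPIr itself stores and uses these descriptions is not covered here.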

The tool is not only for non-coders: in the team's tests, programmers using ScrAPIr completed important data-science tasks such as wrapping and querying APIs nearly four times faster than when they wrote the code themselves.

Alrashed says that user feedback on ScrAPIr has been largely positive. One participant described it as “the type of tool needed to standardize API use across available open source datasets.”

There have been other efforts to make APIs more accessible. Chief among them are description languages such as OpenAPI, which provide structured, machine-readable descriptions of web APIs that can be used to generate documentation for programmers. Alrashed says that, while OpenAPI improves the developer experience, it’s missing several key elements needed to make it useful for non-programmers.
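For comparison, an OpenAPI description is a structured document that software can read directly. Below is a minimal OpenAPI 3.0 description of a hypothetical listings endpoint, written as a Python dict rather than the usual YAML or JSON file, plus a small function that walks it to list each operation and its parameter names. The endpoint, title and parameters are invented for illustration.

```python
# Minimal OpenAPI 3.0 description of a hypothetical endpoint.
spec = {
    "openapi": "3.0.3",
    "info": {"title": "Example listings API", "version": "1.0.0"},
    "paths": {
        "/listings": {
            "get": {
                "summary": "Search listings",
                "parameters": [
                    {"name": "keywords", "in": "query", "schema": {"type": "string"}},
                    {"name": "limit", "in": "query", "schema": {"type": "integer"}},
                ],
                "responses": {"200": {"description": "A page of listings"}},
            }
        }
    },
}

# A path item may also hold non-operation keys such as "summary" or "parameters".
HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def list_operations(spec: dict) -> list[tuple[str, str, list[str]]]:
    """Return (METHOD, path, parameter names) for every operation in the spec."""
    ops = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method in HTTP_METHODS:
                names = [p["name"] for p in operation.get("parameters", [])]
                ops.append((method.upper(), path, names))
    return ops


for method, path, params in list_operations(spec):
    print(method, path, params)
# prints: GET /listings ['keywords', 'limit']
```

Documentation generators work from this kind of machine-readable structure; the description itself says which requests are possible but is aimed at developers rather than at people who want to browse the results.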

Alrashed and Karger developed ScrAPIr with CSAIL PhD student Jumana Almahmoud and former PhD student Amy X. Zhang, now a postdoc at Stanford.