
Sensible Deep Learning for 3D Data

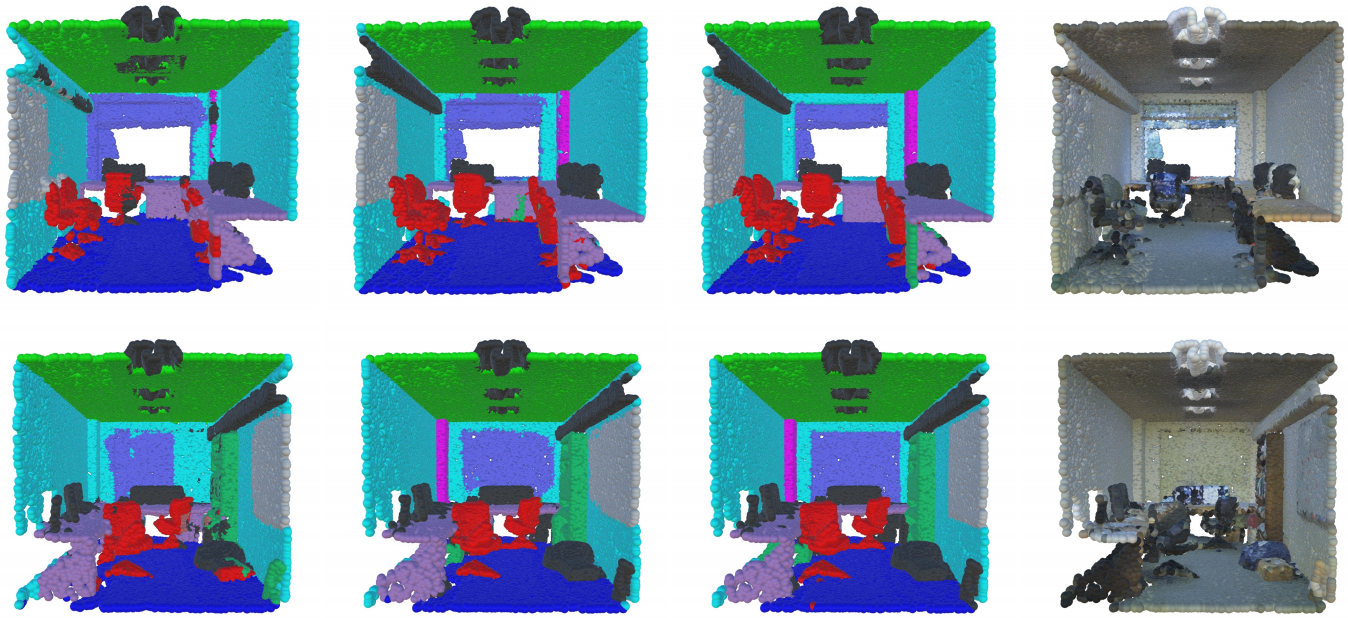

Spurred by 3D sensing modalities and the demands of applications like autonomous driving, the availability of geometric data is following a growth trajectory similar to that of text and imagery. New large-scale databases of point clouds, meshes, and CAD models provide critical information about the "space of shapes" likely to be encountered while navigating new or dynamically changing environments. Machine learning on this type of data provides semantically meaningful cues for understanding surroundings, manipulating objects, and avoiding complex obstacles.

Geometric data, however, presents challenges that do not apply to text or imagery. Surfaces and volumes are not easily expressed on pixel grids, and trivial changes like rotation and scaling drastically affect how they are stored. Even so, geometric data is by no means unstructured; its structure is elegant, but it is simply not the grid structure we leverage for image or video processing.
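
To make the point about storage concrete, here is a minimal sketch using only the Python standard library. It rotates and rescales a toy point cloud: every stored coordinate changes, yet a description built from pairwise distances (normalized by the largest distance) stays the same. The helper names (`rotate_z`, `scale`, `normalized_distances`) and the four-point cloud are hypothetical illustrations chosen for this example, not code or data from this project.

```python
import math

def rotate_z(points, theta):
    """Rotate 3D points about the z-axis by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]

def scale(points, factor):
    """Uniformly scale 3D points about the origin."""
    return [(factor * x, factor * y, factor * z) for x, y, z in points]

def pairwise_distances(points):
    """Euclidean distance for every unordered pair of points."""
    return [math.dist(p, q) for i, p in enumerate(points) for q in points[i + 1:]]

def normalized_distances(points):
    """Pairwise distances divided by the largest one (removes uniform scale)."""
    d = pairwise_distances(points)
    largest = max(d)
    return [v / largest for v in d]

# Hypothetical toy point cloud.
cloud = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 3.0), (1.0, 1.0, 1.0)]
moved = scale(rotate_z(cloud, math.pi / 3), 2.5)

# The raw stored coordinates differ after a rotation and a rescale...
print(cloud[0], moved[0])
# ...but the scale-normalized pairwise distances agree.
same = all(math.isclose(a, b)
           for a, b in zip(normalized_distances(cloud), normalized_distances(moved)))
print(same)  # → True
```

Distance-based descriptions are only one simple way to factor out such transformations; they are used here purely to illustrate the problem, not as a description of the project's method.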

In this project, we are developing deep learning tools designed from the ground up for 3D data, specifically point clouds, triangulated surfaces, and CAD models. These modalities are used widely in applications ranging from design and manufacturing to robotic navigation (e.g., using LiDAR). Rather than shoehorning existing techniques built for images or unstructured data, we start from well-studied constructions in differential geometry and digital geometry processing, which provide well-posed replacements for operations like convolution in the presence of curvature.
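
One widely used example of such a construction is the cotangent discretization of the Laplace-Beltrami operator on a triangle mesh. This choice is our own illustration; the text above does not name a specific operator. The minimal sketch below, written in plain Python, assumes non-degenerate triangles and omits the per-vertex area (mass-matrix) normalization that a full implementation would typically include. Because the weights depend only on triangle angles, they do not change under rotation, translation, or uniform scaling of the mesh. All function names are hypothetical.

```python
import math
from collections import defaultdict

def cot_angle(a, b, c):
    """Cotangent of the interior angle at vertex a of triangle (a, b, c)."""
    u = [b[t] - a[t] for t in range(3)]
    v = [c[t] - a[t] for t in range(3)]
    dot = sum(x * y for x, y in zip(u, v))
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    # cot = cos/sin = (u . v) / |u x v|; assumes a non-degenerate triangle.
    return dot / math.hypot(*cross)

def cotan_weights(vertices, faces):
    """Symmetric edge weights w_ij = (cot alpha_ij + cot beta_ij) / 2."""
    w = defaultdict(float)
    for i, j, k in faces:
        # The angle at each corner contributes to the edge opposite it.
        for a, b, c in ((i, j, k), (j, k, i), (k, i, j)):
            half_cot = 0.5 * cot_angle(vertices[a], vertices[b], vertices[c])
            w[(b, c)] += half_cot
            w[(c, b)] += half_cot
    return w

def apply_laplacian(w, f):
    """(L f)_i = sum_j w_ij (f_j - f_i), without mass-matrix normalization."""
    out = [0.0] * len(f)
    for (i, j), wij in w.items():
        out[i] += wij * (f[j] - f[i])
    return out

# A flat hexagonal fan: one interior vertex (index 0) surrounded by six.
verts = [(0.0, 0.0, 0.0)] + [
    (math.cos(math.pi * k / 3), math.sin(math.pi * k / 3), 0.0) for k in range(6)
]
faces = [(0, 1 + k, 1 + (k + 1) % 6) for k in range(6)]
w = cotan_weights(verts, faces)

f = [x for x, _, _ in verts]  # a linear function (the x-coordinate)
print(abs(apply_laplacian(w, f)[0]) < 1e-12)  # → True
```

The final check reflects a basic sanity property of this discretization: on a flat mesh, it vanishes on linear functions at interior vertices, just as the continuous Laplacian does. Operators of this kind can stand in for grid-based filters when data lives on curved surfaces rather than pixel grids.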

Applications of the machinery we are developing are extremely broad: intelligent, semantically aware 3D shape processing underpins robotics and vision tasks such as grasping and navigation, as well as the design of models for manufacturing.

Contact us

If you would like to contact us about our work, please refer to our members below and reach out to one of the group leads directly.

Last updated Jan 25 '20