Research Group

Multimodal Understanding Group

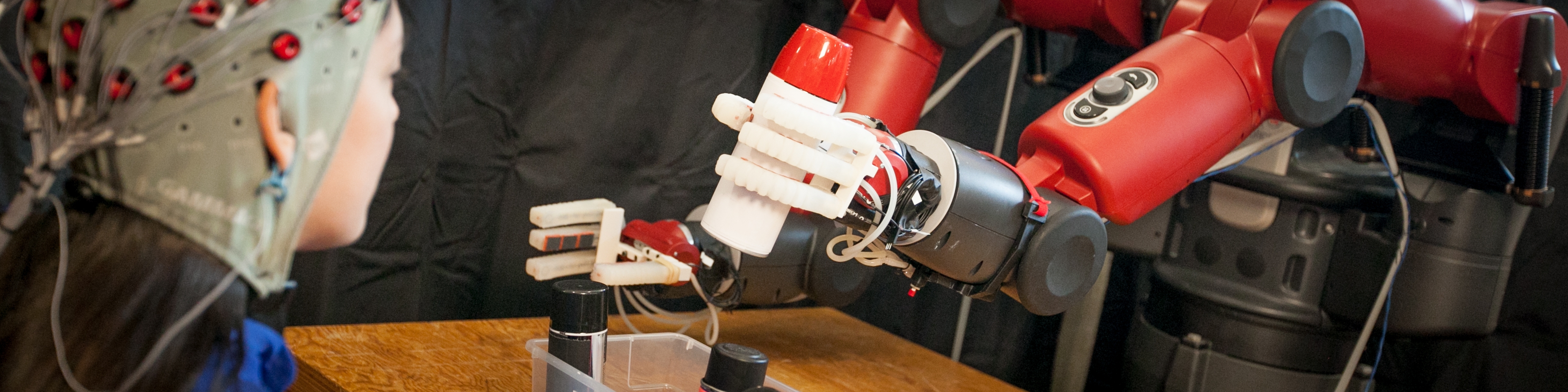

We aim to make gesture, sketching, speech and other modalities effortless to use, enabling natural interaction with computation.

Our research focuses on three areas.

- Our work in gesture centers on building and testing systems that understand body- and hand-based gestures.

- Our sketch-understanding work enables computers to understand the kinds of informal drawings routinely made in design and a wide variety of other tasks.

- Our work on multimodal interaction builds systems that integrate sketching, gesture and speech, leveraging the lab's speech understanding and natural language processing research.

Contact us

To contact us about our work, please see the member list below and reach out to one of the group leads directly.

Last updated Sep 03 '17