Every year, thousands of fatal car accidents are caused by distracted driving. But a team from MIT says that for years the public has been distracted by the wrong distracted-driving narrative.

Much of the driving research has focused on the specific task that distracts a driver, such as looking at directions or reading text messages. However, a new MIT study shows that the much bigger issue is the act of looking away from the road itself.

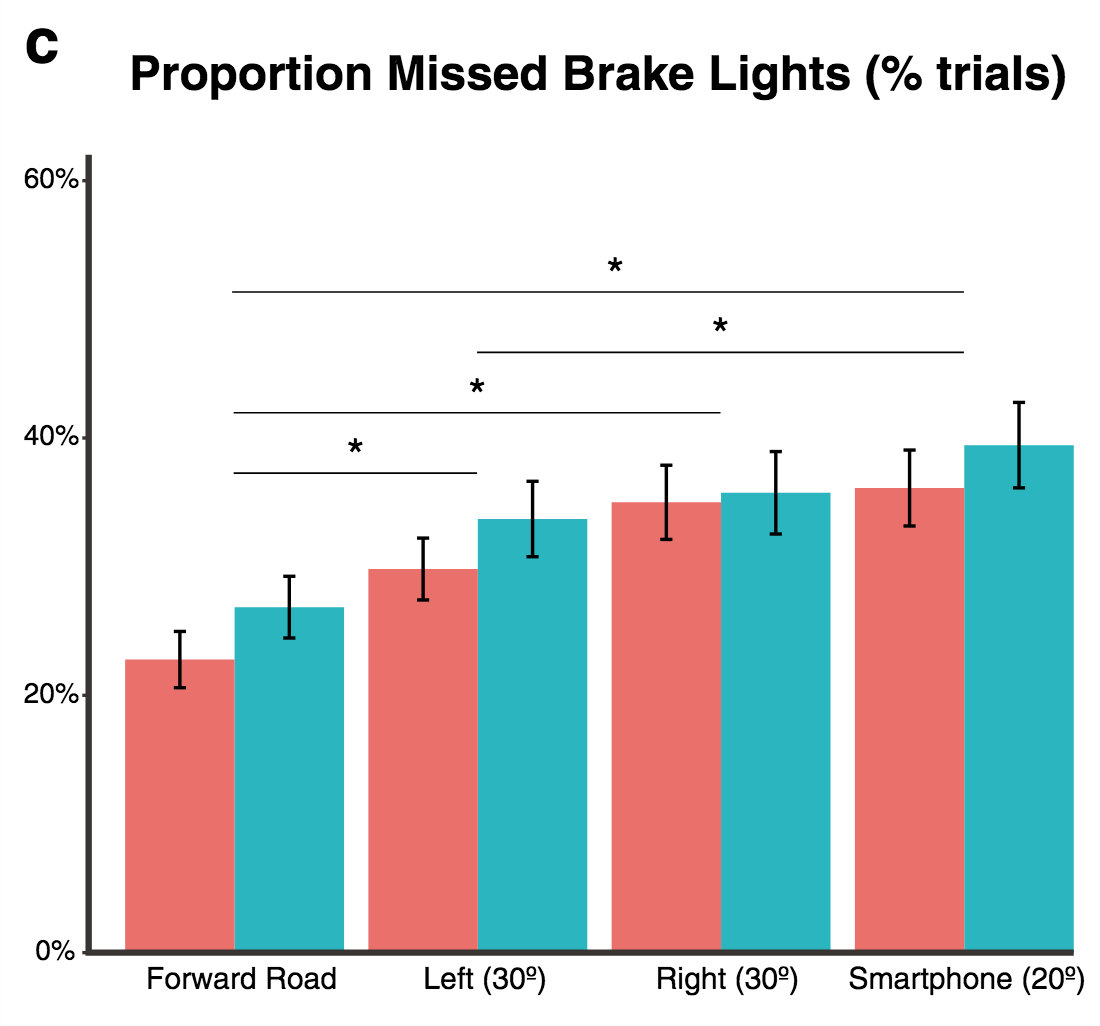

Specifically, the researchers showed that simply looking away slowed people's reaction times by an average of almost half a second. At a driving speed of 75 miles per hour, that delay means you would react to someone slamming on the brakes in front of you only after traveling a full three car lengths. For comparison, the effect of glancing down was more than 10 times larger than the effect of the "cognitive load" imposed by the distracting task itself.

“We’re not saying that the details of whatever you’re doing on your phone can’t also be an issue,” says MIT postdoc Benjamin Wolfe, lead author of a paper about the study published this week in the journal Attention, Perception, & Psychophysics. “But in distinguishing between the task and the act of switching gears itself, we’ve shown that taking your eyes off the road is actually the bigger problem.”

Co-author Ruth Rosenholtz says that taking your eyes off the road forces you to use your peripheral vision, which is limited in how well it can pick up on subtle stimuli like brake lights.

“[With peripheral vision] you can detect actions like braking, but you’re going to be much slower extracting the information you need and responding to them in real time,” says Rosenholtz, a principal research scientist at MIT.

In the new study, participants watched driving videos while looking either at the road ahead or at an angle comparable to where a smartphone would be mounted on the dashboard. They were then given a distracting task to complete while using their peripheral vision to monitor their lane for brake lights. Wolfe’s team found that cognitive load slowed reaction times by an average of only 35 milliseconds, far less than the 450-millisecond slowdown caused by looking away.
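To make the arithmetic behind these figures concrete, here is a small back-of-the-envelope sketch. It uses the 450 ms and 35 ms averages reported by the study. It assumes constant speed and takes a "car length" to be about 15 feet, which is a hypothetical figure and not one from the study.

```python
# Back-of-the-envelope check of the reaction-time figures.
# Assumption: a "car length" is roughly 15 ft (hypothetical, not from the study).
MPH_TO_FT_PER_S = 5280 / 3600  # 1 mile = 5,280 ft; 1 hour = 3,600 s

def distance_ft(speed_mph: float, delay_s: float) -> float:
    """Distance (in feet) covered at a constant speed during a reaction delay."""
    return speed_mph * MPH_TO_FT_PER_S * delay_s

look_away_delay_s = 0.450       # average slowdown from looking away (450 ms)
cognitive_load_delay_s = 0.035  # average slowdown from cognitive load (35 ms)
car_length_ft = 15.0            # hypothetical typical car length

d = distance_ft(75, look_away_delay_s)
print(f"Extra distance at 75 mph: {d:.1f} ft (~{d / car_length_ft:.1f} car lengths)")
print(f"Looking away vs. cognitive load: {look_away_delay_s / cognitive_load_delay_s:.1f}x")
```

At 75 mph a car covers 110 feet per second, so a 450 ms delay adds about 49.5 feet, or roughly three car lengths. Looking away slows reactions about 13 times as much as cognitive load does.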

Wolfe says that, while peripheral vision helps drivers learn more about what’s around them, it isn’t enough on its own to ensure that they’re driving safely.

“If you’re looking down at your phone in the car, you may be aware that there are other cars around,” says Wolfe. “But you most likely won’t be able to distinguish between things like whether a car is in your lane or the one next to you.”

Given the dangers of looking away from the road, Wolfe suggests that drivers use their phones’ voice-enabled assistants for things like directions.

“There’s obviously still cognitive distraction at play when you’re talking to Siri or Alexa,” says Wolfe. “But with all else being equal, it’s much better to be looking at the road than not.”

Another potential improvement would be better “heads-up displays” (HUDs) that appear directly on the windshield. However, such technologies still have limitations, such as poor visibility in daylight.

Wolfe and Rosenholtz co-wrote the journal article with research scientist Bryan Reimer and former MIT postdoc Ben D. Sawyer, alongside postdoc Anna Kosovicheva of Northeastern University. The project is a collaboration among MIT’s Computer Science and Artificial Intelligence Lab, the Department of Brain and Cognitive Sciences, and the AgeLab.

As a next step, Wolfe and colleagues will conduct studies on how quickly drivers can detect people, animals, and other objects that might appear on the road.

“In the big picture, we’re interested in how drivers acquire the information they need to drive, and all the things that can get in the way of that,” says Rosenholtz.

The project was supported in part by the Toyota Research Institute.