Have you ever looked at a painting by Monet or Picasso and wondered, “How’d they do that?”

With a little help from artificial intelligence, it’s now possible to find out: a team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) has developed a machine learning system that takes an image of a finished painting and creates a time-lapse video depicting how the original artist most likely painted it.

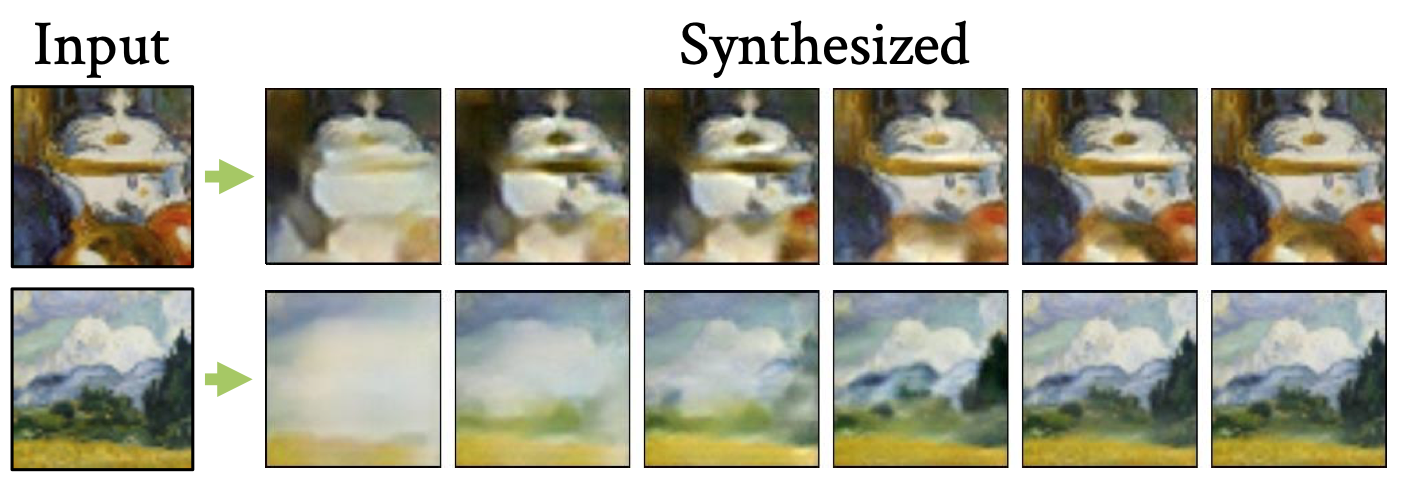

The system, which the team calls “Timecraft,” was trained on more than 200 existing time-lapse videos that people posted online of both digital and watercolor paintings. From there, the team created a convolutional neural network that can look at a painting it has never seen before and figure out the most likely way it was created.

The researchers tested Timecraft by having it generate videos of paintings for which real time-lapse videos exist, then conducting an online survey asking participants which videos seemed most realistic. Timecraft videos outperformed existing benchmarks more than 90 percent of the time, and were mistaken for the real videos nearly half of the time.

A side effect of Timecraft’s reverse-engineering abilities is that it has given researchers some insight into artists’ creative process. The time-lapses showed that painters usually work in a coarse-to-fine manner, starting with “the big picture” before filling in details. They also tend to paint within a single section of a scene, using only one or two colors at a time.

Code for the project is available on GitHub.

CSAIL PhD student Amy Zhao co-wrote a paper about Timecraft with postdoc Guha Balakrishnan, graduate student Kathleen M. Lewis, professors Frédo Durand and John V. Guttag, and research scientist Adrian V. Dalca. It was presented virtually this week at the Conference on Computer Vision and Pattern Recognition (CVPR).