For data-driven companies like Google and Amazon there may be no task more important these days than sorting through all that data. For years scientists have worked to develop better sorting algorithms, often using an approach called “radix-sorting” for data that can be viewed as a sequence of integers.

However, to sort large datasets, most radix-sorting algorithms require significant amounts of memory far beyond what’s available on a conventional consumer laptop. Making them more memory-efficient would open up a mountain of memory-intensive computing tasks, from analyzing genome sequences to crawling web-data.

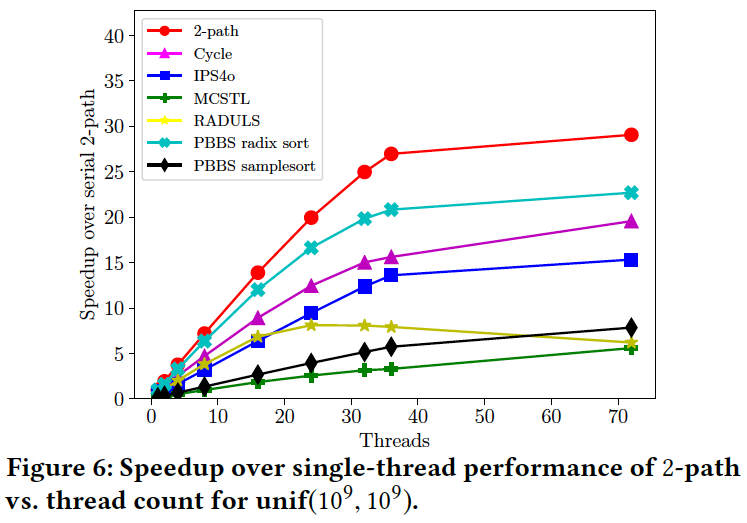

With that in mind, a team from MIT CSAIL has developed a new radix-sorting algorithm called Regions Sort which is up to four times faster than similar algorithms while using half the memory.

“Our goal is to reduce the amount of memory needed so that scientists can sort data with smaller machines, or even compute on much larger datasets using the same machine,” says MIT professor Julian Shun, who co-wrote a new paper on the topic that will be presented this month at the ACM Symposium on Parallelism in Algorithms and Architectures (SPAA) in Phoenix.“With [Regions Sort] we’re excited to have developed something that’s efficient in both theory and practice.”

Graduate student Omar Obeya compares the problem to having a team of robots trying to arrange a bunch of chairs in a classroom. If the room is only just big enough to fit all the chairs, it’s a challenge to sort them all at once, since two robots might each try to put a chair in the same spot at the same time.

Existing algorithms do the equivalent of assuming that the amount of extra space is proportional to the number of chairs, and basically can’t work unless you double the size of the room.

In contrast, Regions Sort essentially increases the size of the room by just a small amount, and uses that space to plan the movements for the robots so that they can work in parallel without running into each other. It also improves performance by minimizing the amount of data that’s moved around, which is often a bottleneck for sorting large inputs. (More technically, the algorithm uses a graph structure to model dependencies across elements that need to be swapped, and generates independent tasks from this graph that can be executed in parallel.)

“We think this algorithm will be useful to researchers with large datasets who don’t want to have to double their memory usage,” says Obeya, lead author on the new paper alongside Shun and undergraduates Endrias Kahssay and Edward Fan. “It could be particularly helpful for people who want to crunch these kinds of data points on their own personal laptops.”

In tests, the researchers demonstrated that Regions Sort is very competitive on a wide range of input types and sizes. The researchers also showed strong theoretical bounds on the performance of the algorithm, which ensures that it works well in many settings. Shun says that the team wanted to make sure the algorithm was robust enough to handle potential future cases of much larger datasets or next-generation computers with significantly more parallel processing units.

The algorithm is considered a “parallel in-place radix-sorting algorithm” because it uses significantly less additional memory than the input size. Shun says that it uses at most 5 percent more memory than the input data itself, compared to similar algorithms that would use at least as much additional memory as the input itself. (He says that, for larger datasets, the percentage of additional memory would be even lower for the algorithm because the additional memory is not proportional to the size of the input data.)

“The researchers make use of an innovative idea based on what they call a regions graph, which allows taking what seems like an inherently sequential process of shuffling the data and making it parallel,” says Guy Blelloch, a professor of computer science at Carnegie Mellon University who was not involved in the research. ““They show how to sort integers with minimal extra space while still achieving the fastest times available on multicore servers.”

The team’s next step is to adapt their method for general sorting algorithms. They also hope to explore similar algorithms that would work in areas like graph search, which is widely used by companies like Facebook and Amazon to suggest friends and recommend products.

The paper was supported in part by the Applications Driven Architectures Research Center, the Defense Advanced Research Projects Agency, the Department of Energy and the National Science Foundation.