Roughly 2,400 years ago, the Greek philosopher Plato touted his belief that our eyes work by emitting beams of light. The idea was inventive, if incorrect, and it speaks to an early fascination with optical phenomena that has carried through to the present: artificial vision systems inspired by biology.

Giving our hardware sight has empowered a host of applications in self-driving cars, object detection, and crop monitoring, but, unlike animals, synthetic vision systems can’t simply evolve in natural habitats. As a result, dynamic vision systems that can navigate both land and water have yet to power our machines. That gap led scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), the Gwangju Institute of Science and Technology (GIST), and Seoul National University in Korea to develop a novel artificial vision system that closely replicates the vision of the fiddler crab, which tackles both terrains. The semi-terrestrial species, known affectionately as the calling crab because it appears to beckon with its huge claws, has amphibious imaging ability and an extremely wide field of view, whereas all current systems are limited to a hemispherical one.

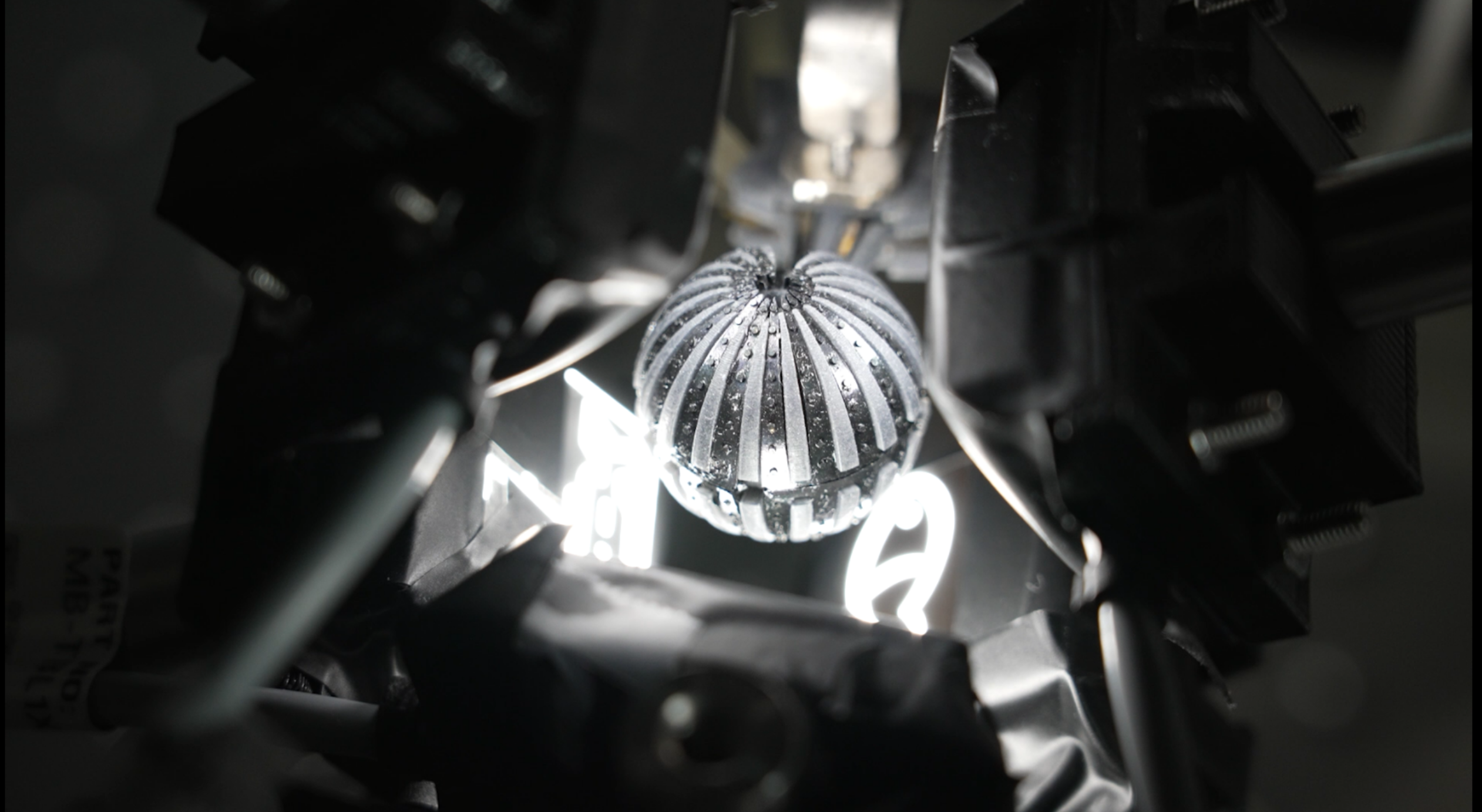

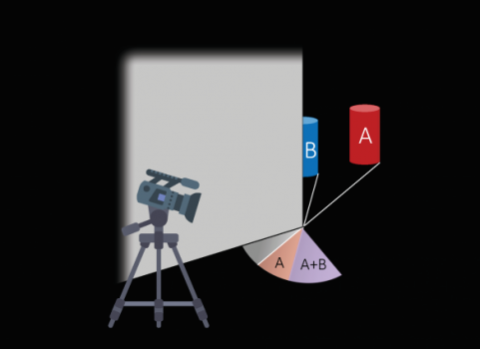

The artificial eye, which resembles a small, largely nondescript black ball, makes sense of its inputs through a combination of materials that process light. The scientists combined an array of flat microlenses with a graded refractive index profile and a flexible photodiode array with comb-shaped patterns, all wrapped onto a 3-D spherical structure. This configuration means that light rays from multiple sources always converge at the same spot on the image sensor, regardless of the refractive index of the surroundings.
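To see why flat lenses help, it can be useful to compare them with a conventional curved lens. The sketch below is our own illustration, not the authors' design model: it applies the standard thin-lens lensmaker's equation, 1/f = (n_lens/n_medium - 1)(1/R1 - 1/R2), to the same lens immersed in air (n ≈ 1.00) and in water (n ≈ 1.33). The lens index of 1.5, the 10 mm radii, and the helper names `thin_lens_focal_length` and `focal_shift` are hypothetical choices for illustration. The model ignores the graded-index profile entirely; it only shows how much a curved surface's focusing power depends on the surrounding medium, and that a flat surface (infinite radius) contributes none in either.

```python
import math

# Assumed, illustrative refractive indices (not values from the paper).
N_AIR = 1.00
N_WATER = 1.33


def thin_lens_focal_length(n_lens, n_medium, r1, r2):
    """Focal length of a thin lens immersed in a medium (lensmaker's equation).

    1/f = (n_lens / n_medium - 1) * (1/r1 - 1/r2)

    Radii follow the common convention in which a biconvex lens has
    r1 > 0 and r2 < 0. Pass math.inf for a flat surface.
    Returns math.inf when the lens has zero refractive power.
    """
    power = (n_lens / n_medium - 1.0) * (1.0 / r1 - 1.0 / r2)
    if power == 0.0:
        return math.inf
    return 1.0 / power


def focal_shift(n_lens, r1, r2):
    """Return (focal length in air, focal length in water) for the same lens."""
    return (
        thin_lens_focal_length(n_lens, N_AIR, r1, r2),
        thin_lens_focal_length(n_lens, N_WATER, r1, r2),
    )


if __name__ == "__main__":
    # Hypothetical biconvex lens: n = 1.5, 10 mm radius on each face.
    f_air, f_water = focal_shift(1.5, 10.0, -10.0)
    print(f"curved lens: f_air = {f_air:.1f} mm, f_water = {f_water:.1f} mm")

    # Flat faces on both sides: no surface refraction in either medium.
    f_air_flat, f_water_flat = focal_shift(1.5, math.inf, math.inf)
    print(f"flat lens:   f_air = {f_air_flat}, f_water = {f_water_flat}")
```

Under these assumptions, the curved lens's focal length grows nearly fourfold (from 10 mm to about 39 mm) when it moves from air into water, so a sensor placed for one medium would be badly out of focus in the other. A flat-faced lens has no surface power to lose, which is the intuition behind leaving the focusing work to a graded refractive index inside the lens.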

The team tested both the amphibious and panoramic imaging capabilities in air and in water by imaging five objects at different distances and directions. The system provided consistent image quality and an almost 360° field of view in both terrestrial and aquatic environments. In other words, it could see both underwater and on land, whereas previous systems have been limited to a single domain.

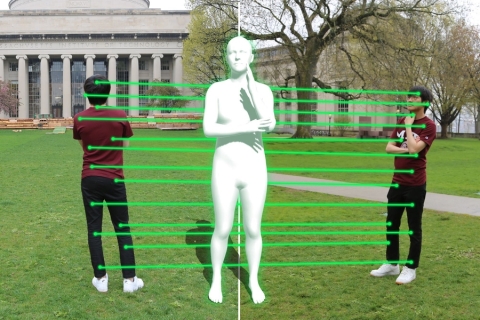

“Our system could be of use in the development of unconventional applications, like panoramic motion detection and obstacle avoidance in continuously changing environments, as well as augmented and virtual reality. Currently, the size of a semiconductor optical unit, commonly used in smartphones, automobiles, and surveillance/monitoring cameras, is restricted at the laboratory level,” says Young Min Song, professor of electrical engineering and computer science at GIST. “However, with the technology of image sensor manufacturers such as Samsung and SK Hynix, the technological limitation is surmountable to develop a camera that is much smaller and has better imaging performance than the ones currently manufactured. We expect that the level of domestic system semiconductor technology will be advanced through the production of this new concept image sensor.”

Feeling crabby

There’s more than meets the eye when it comes to fiddler crabs. Behind their massive claws lie powerful, unique vision systems shaped by life both underwater and on land. Their flat corneas, combined with a graded refractive index, counter defocusing effects arising from changes in the external environment, an overwhelming limitation for other compound eyes. The little creatures also have a 3-D omnidirectional field of view, thanks to an ellipsoidal, stalk-eye structure. They’ve evolved to look at almost everything at once to avoid attacks on wide-open tidal flats, and to communicate and interact with mates.

Biomimetic cameras aren’t new. In 2013, a wide field-of-view (FoV) camera that mimicked the compound eyes of an insect was reported in Nature, and in 2020, a wide-FoV camera mimicking a fish eye emerged. While these cameras can capture large areas at once, it’s structurally difficult for them to exceed 180 degrees. More recently, commercial products with a 360-degree FoV have come into play. These can be clunky, though: they have to merge images taken from two or more cameras, and enlarging the field of view requires an optical system with a complex configuration, which causes image distortion. It’s also challenging to sustain focusing capability when the surrounding environment changes, such as moving between air and water, hence the impetus to look to the calling crab.

The crab proved a worthy muse. During the tests, five cutesy objects (a dolphin, an airplane, a submarine, a fish, and a ship) at different distances were projected onto the artificial vision system from different angles. The team also performed multilaser spot imaging experiments, and the resulting artificial images matched the simulations. To go deep, they immersed the device halfway in a container of water.

A logical next step for the work is to explore biologically inspired light-adaptation schemes in the quest for higher resolution and better image processing techniques.

“This is a spectacular piece of optical engineering and non-planar imaging, combining aspects of bio-inspired design and advanced flexible electronics to achieve unique capabilities unavailable in conventional cameras. Potential uses span from population surveillance to environmental monitoring,” says John A. Rogers, the Louis Simpson and Kimberly Querrey Professor of Materials Science and Engineering, Biomedical Engineering, and Neurological Surgery at Northwestern University.

The paper was recently published in Nature Electronics. MIT professor Fredo Durand wrote the paper alongside 15 coauthors. This research was supported by the Institute for Basic Science, the National Research Foundation (NRF) of Korea, and the GIST-MIT Research Collaboration grant funded by GIST in 2022.