One of the most unique human qualities is imagination. If you can picture somebody in your head, you can probably picture them doing a range of different (hopefully PG-rated) activities.

Machines, meanwhile, are inherently unimaginative. But researchers from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) have recently taken a step towards changing that: they’ve created a system that can look at a photo of somebody doing an activity like swinging a tennis racket, and then be given a proposed new pose for that person, and be able to create a realistic image of them in that pose.

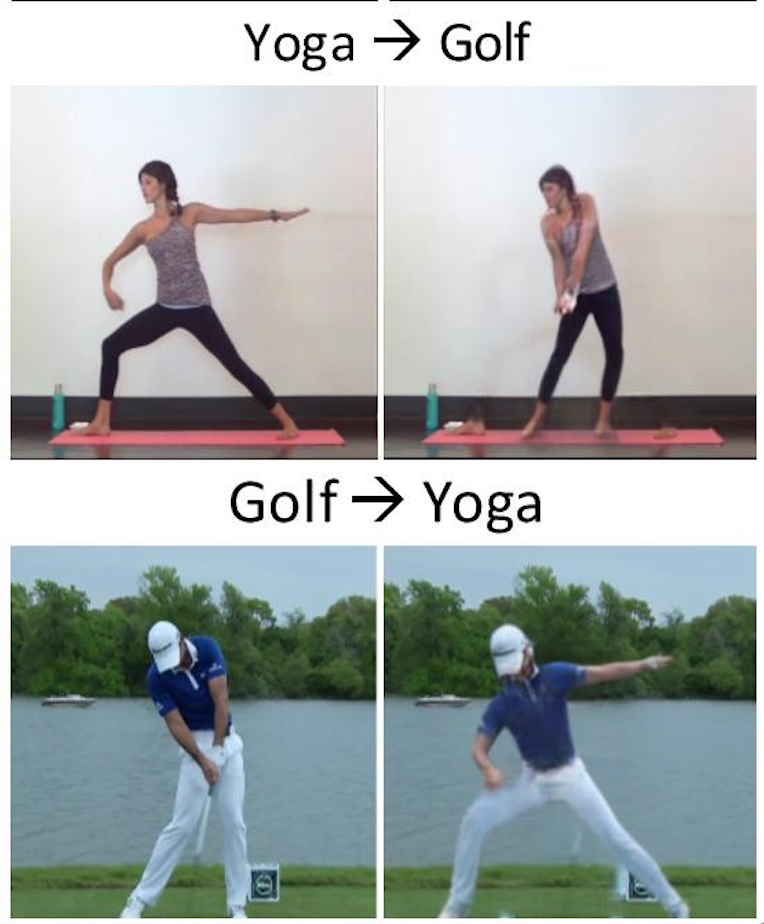

The system can do this not just within a specific activity - like taking a golfer at the beginning of his swing and fast-forwarding him to the end of it - but across different activities. For example, the system created a goofy photo of Barack Obama swinging a tennis racket on the golf course.

The researchers were surprised to find that the system could even take an image and create realistic videos of a given action - a task it wasn’t explicitly trained to do.

While some of these examples may seem silly, the team says that future versions of such systems could have many tangible uses, from helping self-driving cars predict future actions from different angles, to coaches helping their players literally see themselves using the correct form.

The work is part of the larger field of “view synthesis” focused on developing systems that can create unseen views of people.

One unsolved problem is that, since we obviously don’t have photos of every person in every pose, researchers have to develop algorithms that can create images of people in poses they’ve never seen before. This isn’t easy, given that new poses cause complex changes to shadows, occlusions and other aspects of an image that are difficult to smooth out.

“Most existing networks in this field create new images by takingjust take an image and essentially draw what they think the new view shouldit looks like,” says postdoc Guha Balakrishnan, the lead author on a new paper about the project. “You can think of our network as taking an existing image, cutting it up into parts and then moving those parts, to ensure that you’re getting an image that is based directly on the original.”

Specifically, one of the CSAIL team’s key innovations is to break the problem into a series of simpler “subtasks.” that may be different from one image to another. Their network first segments the source image into a background layer and multiple foreground layers that correspond to different body parts.

When the network is given a target pose, it then can essentially “move” the body parts to the particular target locations. The body parts are then fused together to create a new image, while the background is separately filled in. (This can be tricky, especially when there are visual gaps, shadows and occlusions that won’t appear in the final pose.)

“Once we split the task into subproblems, the network can tackle them as smaller challenges,” says co-author Amy Zhao, a graduate student at CSAIL. “Not only does this make the learning task easier, but it makes our model more interpretable, which means that researchers can debug it if it’s not working and can continue to improve upon it.”

Training the neural network on more than 250 videos of people doing yoga, tennis and golf, Balakrishnan says the team was somewhat surprised that the system could determine pose info just from the 2-D pose.

“The pose doesn’t say anything about how one arm should be above another, or anything like that,” he says. “All that ordering of body parts has to be done right.”

The fact that the system could generate videos even though it wasn’t trained to do so was also a positive sign for the new direction of research.

“We believe this can be attributed to how we move body layers to match the target pose, instead of previous work that has relied on the network to combine appearance and motion information from the input frame,” Balakrishnan says.

The system still has many wrinkles to iron out before it will be able to create new images that are hyper-realistic. (Anyone concerned about deepfakes needn’t worry.) It’s not yet sophisticated enough to recreate the rackets and golf clubs that humans are holding in the original images, and sometimes struggles with backgrounds and the more subtle nuances of hands and faces. It also currently requires that the person not turn their back to the camera, which poses problems for activities that involve spins and turns, like dancing or figure skating.

Moving forward, the team plans to update their model to explicitly focus on generating video, as well as seeing what they can do with information from 3-D poses rather than 2-D ones.

Balakrishnan co-wrote the paper with professors Fredo Durand and John Guttag, as well as Zhao and postdoc Adrian V. Dalca. They are presenting it this week at the Conference on Computer Vision and Pattern Recognition (CVPR) in Salt Lake City, Utah.