Scientists often train computers to learn in the same way that young children do - by setting them loose and letting them play by themselves. Kids interact with their environments, play games on their own, and gradually get better at doing things.

Many artificial intelligence (AI) systems tout their ability to “learn from scratch,” but this usually isn’t strictly true. Self-driving cars rely on intricate 3D maps of their surroundings, while systems like AlphaGo are given the positions of the pieces on the board. But what if an AI could be trained by imagining others’ states of mind?

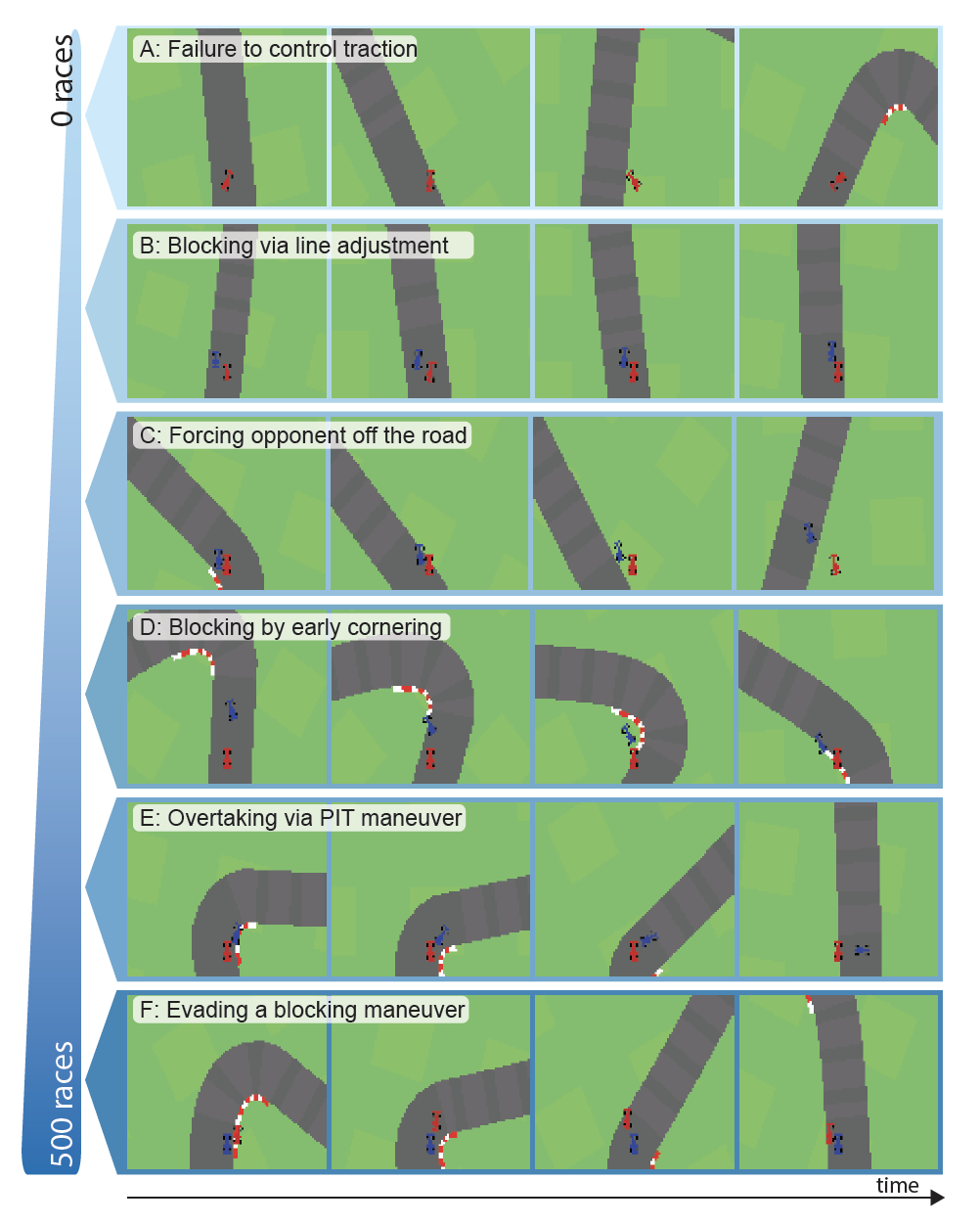

In a new paper, a team led by MIT computer scientists trained a neural network to learn NASCAR-style driving maneuvers purely from a sequence of images taken from a two-player racing game. The network begins without knowing anything about cars, roads, or driving - and yet it ultimately learns to perform complex maneuvers such as overtaking an opponent on a turn and even forcing other cars off the road.

The project builds on an earlier paper that uses tools from social psychology to classify drivers by how selfish or selfless they are. While in this case the system learned more competition-oriented actions, the researchers say that it could also be used to learn more cooperative actions, such as negotiating with other drivers at intersections and merging in dense highway traffic.

The “Deep Latent Competition” (DLC) algorithm learns from interactions in simulated driving sequences purely based on visual observations. It uses its own learned response to different scenarios in order to predict the actions of the car it’s racing against.

“This method of ‘imagined self-play’ learns a model of the world and imagines the outcome of actions taken during races against opponents,” says graduate student Wilko Schwarting, co-lead author on a new paper on the project alongside graduate student Tim Seyde and research scientist Igor Gilitschenski. “By imagining interactions with other drivers, fewer interactions are necessary to become competitive.”
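
To make the idea of "imagined self-play" concrete, here is a minimal toy sketch in Python. It is not the researchers' implementation: the real system learns its world model and driving policy from images, whereas everything here (the `State` class, the `step` model, the `policy` rule, the three speed actions) is a hypothetical, hand-written stand-in. The only point it illustrates is the one described above: the car predicts its opponent by running its own decision rule from the opponent's point of view, then imagines how a race would unfold before acting.

```python
from dataclasses import dataclass

# Hypothetical action set: distance advanced per step (hold back, cruise, push).
ACTIONS = (0, 1, 2)


@dataclass(frozen=True)
class State:
    ego: int       # ego car's position along the track
    opponent: int  # opponent car's position along the track


def swap_view(state: State) -> State:
    """Return the same situation as seen from the opponent's seat."""
    return State(ego=state.opponent, opponent=state.ego)


def step(state: State, ego_action: int, opp_action: int) -> State:
    """Toy stand-in for a learned world model: each car advances by its action."""
    return State(state.ego + ego_action, state.opponent + opp_action)


def policy(state: State) -> int:
    """Toy stand-in for a learned policy: push when behind, cruise otherwise."""
    return 2 if state.ego < state.opponent else 1


def imagine_return(state: State, first_action: int, horizon: int) -> int:
    """Imagine a short race, predicting the opponent with our own policy."""
    action = first_action
    for _ in range(horizon):
        # The opponent is modeled as "me, sitting in their seat".
        opp_action = policy(swap_view(state))
        state = step(state, action, opp_action)
        action = policy(state)
    return state.ego - state.opponent  # imagined lead when the rollout ends


def choose_action(state: State, horizon: int = 3) -> int:
    """Pick the first action whose imagined rollout ends with the largest lead."""
    return max(ACTIONS, key=lambda a: imagine_return(state, a, horizon))
```

In this sketch no real race is ever run while choosing an action: every outcome comes from the imagined rollout, which is the sense in which imagining interactions can reduce the number of real ones needed.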

In simulations, the team’s car won 2.3 times as many races as its opponent, which was trained with a traditional “single-agent world model” that accounts only for how the car’s own actions affect the world.
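
For a rough sense of scale, the 2.3-to-1 ratio can be converted into a share of races won. The short sketch below assumes that every race ends with exactly one winner (no ties or unfinished races), which the article does not state; the function name `win_share` is purely illustrative.

```python
def win_share(win_ratio: float) -> float:
    """Convert 'won N times as many races' into a fraction of decided races.

    Assumes every race has exactly one winner, so the two cars' wins
    add up to the total number of races.
    """
    return win_ratio / (win_ratio + 1.0)


share = win_share(2.3)  # 2.3 / 3.3
print(f"{share:.1%}")
```

Under that assumption, the ratio corresponds to the team's car winning roughly 70 percent of races.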

“The environment allows us to differentiate between skillful and competitive driving,” says MIT professor Daniela Rus, one of the senior authors on the paper. “While the former is the basis for high-performance racing, learning to beat a skillful opponent is a far greater challenge.”

Rus, Schwarting, Seyde and Gilitschenski co-wrote the paper alongside MIT professor Sertac Karaman, PhD student Lucas Liebenwein and graduate student Ryan Sander. The paper is being presented virtually this week at the annual Conference on Robot Learning (CoRL).

This project was supported in part by Qualcomm and the Toyota Research Institute.