The Editorial Board of the Proceedings of the ACM on Interactive, Mobile, Wearable, and Ubiquitous Technologies (IMWUT) has given researchers from the MIT Computer Science and Artificial Intelligence Laboratory (CSAIL) and the Gwangju Institute of Science and Technology (GIST) a Distinguished Paper Award for their evaluation of visual explanations of autonomous vehicles’ decision-making.

Out of 205 papers published in IMWUT volume 7 last year, the team’s study, “What and When to Explain?: On-road Evaluation of Explanations in Highly Automated Vehicles,” was one of eight awardees. Each year, the Distinguished Paper Award recognizes novel and exemplary works that impact computing technologies such as mobile devices, wearables, and the Internet of Things.

The paper was authored by Daniela Rus, CSAIL Director and MIT Professor of EECS, and GIST researchers: Professor SeungJun Kim and PhD students Gwangbin Kim, Dohyeon Yeo, and TaeWoo Jo. Together, they implemented a framework that integrated augmented reality with machine learning to simulate vehicle perception and attention in actual vehicles on roads. The team conducted a user study on various safety visualizations, finding that timely and relevant displays of how vehicles perceive their surroundings and assess traffic risks increase user trust.

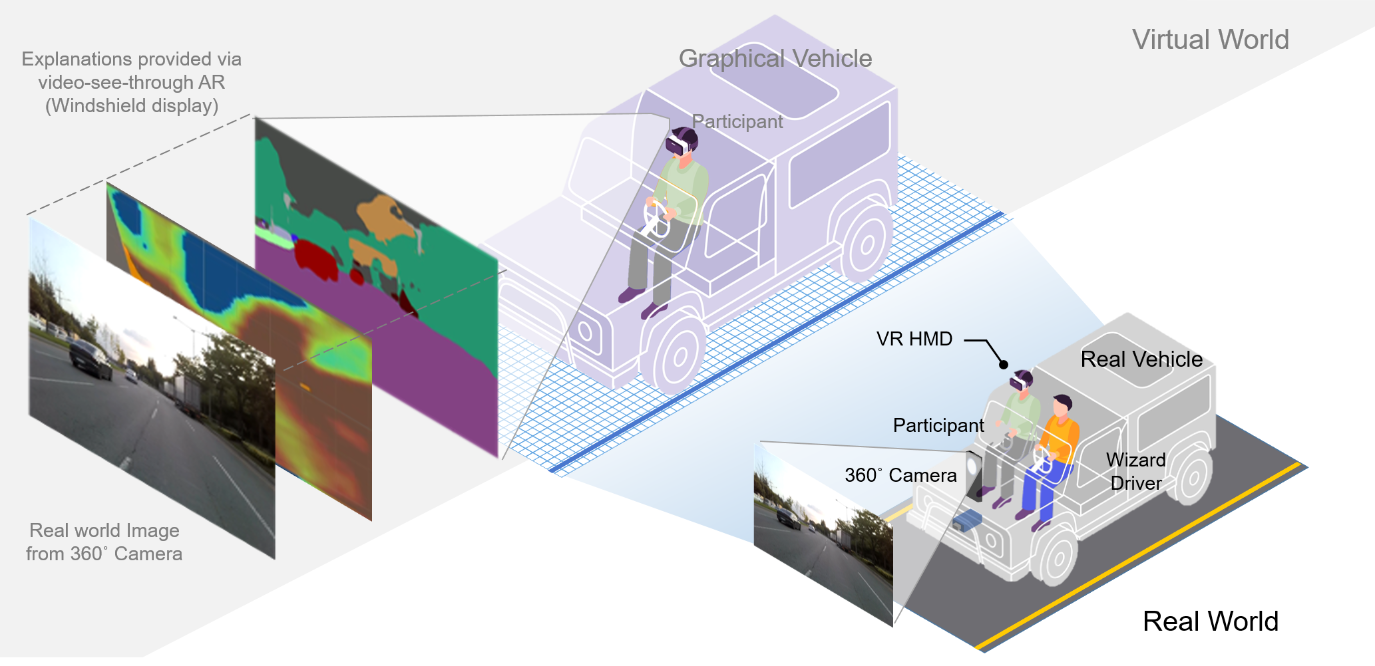

To do this, the team invited participants to take part in a mixed-reality self-driving car simulation with seven display scenarios. The scenarios included different types of windshield displays (perception, attention, and a combination of both), each shown either continuously or only when a risk was present. The "perception" display used an algorithm to visually segment objects like buses, bicycles, and pedestrians, while the "attention" display featured a heat map indicating hazardous areas, based on a 3D convolutional neural network trained on car crash data.
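For readers who want a concrete picture of the study design, the display conditions can be sketched as a small grid. This is an illustrative sketch, not the team's code. The article does not list the seven scenarios: three display types combined with two timing modes gives six, and the seventh is assumed here to be a no-display baseline. The names `Scenario`, `build_scenarios`, `should_render`, `risk_score`, and the `0.5` threshold are all hypothetical.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Optional

# Display types and timing modes named in the article.
DISPLAY_TYPES = ("perception", "attention", "perception+attention")
TIMINGS = ("continuous", "risk-only")


@dataclass(frozen=True)
class Scenario:
    display: Optional[str]  # None = no windshield display (assumed baseline)
    timing: Optional[str]   # None for the assumed baseline


def build_scenarios() -> List[Scenario]:
    """Enumerate the display conditions: one assumed baseline plus 3 x 2 combinations."""
    scenarios = [Scenario(None, None)]  # assumption: seventh scenario is a baseline
    scenarios += [Scenario(d, t) for d, t in product(DISPLAY_TYPES, TIMINGS)]
    return scenarios


def should_render(scenario: Scenario, risk_score: float, threshold: float = 0.5) -> bool:
    """Decide whether the overlay is drawn for the current frame.

    risk_score is a hypothetical value in [0, 1], e.g. the peak of an
    attention heat map; the threshold is an arbitrary placeholder.
    """
    if scenario.display is None:
        return False  # baseline never shows an overlay
    if scenario.timing == "continuous":
        return True   # continuous displays are always on
    return risk_score >= threshold  # risk-only displays activate on risk
```

Under these assumptions, a risk-only scenario stays hidden during uneventful driving and appears once the hypothetical risk score crosses the threshold, while a continuous scenario is always visible.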

The study revealed that continuous perception displays reduced anxiety and increased trust in the autonomous vehicle, while a combination of perception and attention displays, shown occasionally, also enhanced users' confidence in the vehicle’s decisions. The team highlights that their framework can be adapted for other AI models, offering flexibility for various types of explanations, including verbal or textual descriptions. SeungJun Kim, Professor at GIST, noted, “This framework can be extended to safely evaluate explainable AI methods and their impact on passenger experience in real-world driving scenarios.”

The research was funded by a GIST-MIT Research Collaboration grant in 2023. The Distinguished Paper Award was announced at the UbiComp/ISWC 2024 Awards Ceremony, accompanied by a gala dinner, where the IMWUT Editorial Board praised the study: “This timely, comprehensive research investigates explainable AI in real-world vehicle contexts through VR-based user simulations, significantly enhancing user experience for self-driving technology.”