By attacking even black-box systems w/hidden information, MIT CSAIL students show that hackers can break the most advanced AIs that may someday appear in TSA security lines and self-driving cars.

Apps like Facebook often use neural networks for image recognition so that they can filter out inappropriate, violent, or lewd content. Groups like the TSA are even considering using them to detect suspicious objects in security lines.

But neural networks can easily be fooled into thinking that, say, a photo of a turtle is actually a gun. This can have major consequences: imagine if, simply by changing a few pixels, a bitter ex-boyfriend could put private photos up on Facebook, or a terrorist could disguise a bomb to evade detection.

According to a team from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), such hacks are even easier to pull off than we thought. In a new paper, researchers developed a method of fooling neural networks that is up to 1000 times faster than existing approaches for so-called “black-box” settings (i.e. systems whose internal structures aren’t visible to the hacker).

“With a ‘white-box’ system you can look at the source code and how it’s weighing various factors, and from that, compute how much you need to change each pixel to fool the network,” says PhD candidate Anish Athalye, who was one of the lead authors on the new paper alongside master’s student Andrew Ilyas and undergraduates Logan Engstrom and Jessy Lin. “It’s much more difficult when you’re not privy to that kind of information, since you’re essentially in the dark about how it works.”

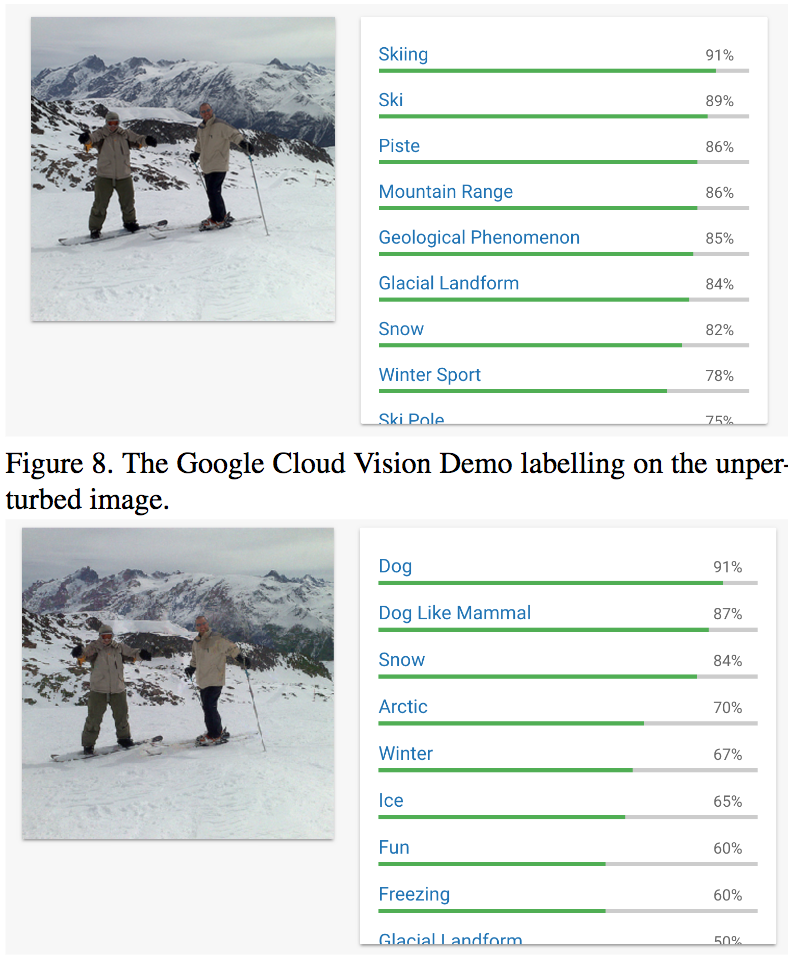

To test their method, the team showed that they could transform an image of a dog into a photo of two people skiing, all while the image-recognition system still classifies the image as a dog. (The team tested their method on Google’s Cloud Vision API, but say that it would work for similar APIs from Facebook and other companies.)

What’s especially impressive is that the researchers don’t even need complete information about what Google’s image-recognition system is “seeing.”

Usually when such systems analyze an image, they show you the exact percent probability that the image is any of many types of objects - i.e. a cat, a dog, a cup of coffee, etc.

“But with partial-information systems like Google’s [vision] API, if an attacker uploads an image and wants to see how the API classifies it, he can only see some hard-to-interpret ‘scores’ rather than actual probabilities,” says Ilyas. “We show that we can still fool the network with this basic information.”

One existing approach to fooling black-box systems has involved changing every single pixel one way or another and seeing how each change affects the classification. That’s manageable for 50-by-50-pixel thumbnails, but the method quickly becomes impractical for anything much larger than that.

The CSAIL team instead used an algorithm called a “natural evolution strategy” (NES). NES looks at a range of similar adversarial images and makes a change to the pixels of the image in the direction of similar objects.

Their approach switches between two actions. Using the dog-skier model, the system first changes specific pixels to make the network’s classification of the photo even more skewed towards “dog.” Then it changes the RGB values of the pixels to make the image physically look more and more like skiers.

The team’s paper is currently under submission for the annual Computer Vision and Pattern Recognition conference (CVPR), taking place in Utah in June.