This week a team from CSAIL’s computer vision group co-hosted the first Scene Parsing Challenge at the 2016 European Conference on Computer Vision (ECCV) in Amsterdam. The challenge focused on scene recognition: using data to train algorithms that classify and segment the objects in a scene.

Scene recognition has important applications in robotics and even psychology. Better algorithms could determine actions happening in a given environment, spot inconsistent objects or human behaviors, and even predict future events.

“This challenge creates a standard benchmark for model comparison and motivates researchers to propose stronger models with improved performances in scene parsing and classification,” said CSAIL PhD student Bolei Zhou, who oversaw both of the scene understanding challenges.

The event was held jointly with the Large Scale Visual Recognition Challenge 2016. Other researchers who helped organize the challenge include PhD students Hang Zhao and Xavier Puig, MIT professor Antonio Torralba, and University of Toronto professor Sanja Fidler.

Tackling the challenge

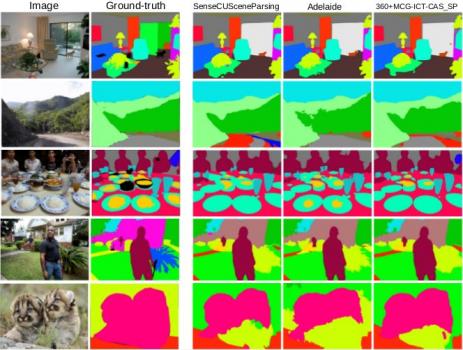

For the scene parsing challenge, teams were given fully annotated images on which to train their algorithms.

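Their algorithms were then tested on new photos and scored on how well they segmented each image and assigned the correct category to every pixel. Two measures were used: pixel accuracy, the fraction of pixels labeled correctly, and intersection over union (IoU), which measures how closely each predicted object region overlaps the annotated one.

For example, in a photo of a building with trees and cars, the “building” region might cover 60 percent of the pixels, the “car” region 30 percent and the “tree” region 10 percent. An algorithm that labeled only the building pixels correctly would still reach 60 percent pixel accuracy, but its IoU for the “car” and “tree” categories would be zero. Averaging IoU over categories keeps large regions such as buildings from dominating the result.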
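To make the two measures concrete, here is a minimal Python sketch that scores a predicted label map against a human-annotated one. It assumes both maps are equal-sized grids of integer class IDs and uses the standard definitions of pixel accuracy and per-category IoU. Combining the two by a simple average follows the scene parsing benchmark's published description of its ranking score, but the function names here are hypothetical and this is not the official evaluation code, which would typically accumulate counts over the whole test set rather than one image.

```python
from typing import List

# A label map is a 2-D grid holding one integer class ID per pixel.
LabelMap = List[List[int]]


def pixel_accuracy(pred: LabelMap, truth: LabelMap) -> float:
    """Fraction of pixels whose predicted class matches the annotation."""
    total = correct = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            total += 1
            correct += (p == t)
    return correct / total


def mean_iou(pred: LabelMap, truth: LabelMap) -> float:
    """Average over categories of |pred AND truth| / |pred OR truth|."""
    classes = {t for row in truth for t in row} | {p for row in pred for p in row}
    ious = []
    for c in sorted(classes):
        inter = union = 0
        for pred_row, truth_row in zip(pred, truth):
            for p, t in zip(pred_row, truth_row):
                inter += (p == c and t == c)
                union += (p == c or t == c)
        # union > 0 because c occurs in pred or truth
        ious.append(inter / union)
    return sum(ious) / len(ious)


def benchmark_score(pred: LabelMap, truth: LabelMap) -> float:
    """Hypothetical combined score: mean of pixel accuracy and mean IoU."""
    return (pixel_accuracy(pred, truth) + mean_iou(pred, truth)) / 2


# Illustrative 2 x 5 image: 0 = building, 1 = car, 2 = tree (hypothetical IDs),
# split 60/30/10 percent like the example in the text.
truth = [[0, 0, 0, 0, 0],
         [0, 1, 1, 1, 2]]
# The prediction gets every building pixel and two of the three car pixels
# right, but labels the last car pixel and the tree pixel as building.
pred = [[0, 0, 0, 0, 0],
        [0, 1, 1, 0, 0]]

print(pixel_accuracy(pred, truth))             # 0.8
print(round(mean_iou(pred, truth), 3))         # 0.472
print(round(benchmark_score(pred, truth), 3))  # 0.636
```

Here pixel accuracy looks high (0.8) while mean IoU is much lower (0.472), because the missed tree pixel costs a whole category's IoU. Reporting both numbers exposes that difference.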

To train and test their algorithms, participants used CSAIL’s ADE20K dataset of more than 22,000 photos annotated with objects and object parts. Algorithms were tested on 150 semantic categories, ranging from regions such as “sky,” “road” and “grass” to discrete object types such as “person,” “car” and “bed.”

Out of 75 entries submitted by 22 teams from around the world, the winning team scored 0.57205, or about 57 percent. That score is the average of the team’s pixel accuracy and its mean intersection over union (IoU) across the 150 categories, so it reflects both how many pixels were labeled correctly and how well each object category was outlined.

Top-ranked teams were invited to present their algorithms at ECCV’16 in Amsterdam, which more than two thousand researchers from around the world attended.


Places Scene Classification Challenge

The team, which included recent PhD graduate Aditya Khosla and principal research scientist Aude Oliva, simultaneously hosted the second “Places Scene Classification Challenge,” where the goal was to identify the scene category in a given photo.

For the challenge, the team created the Places2 dataset, which has more than ten million images and over 400 distinct scene categories.

Each algorithm produced a list of five candidate categories for a photo, and the prediction counted as correct if any of the five matched the label assigned by a human annotator. Since many environments can be described with different words (for example, a gym might also be called a “fitness center”), this allowed an algorithm to propose several plausible scene categories.
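The five-guess rule above is usually called top-5 accuracy. The Python sketch below shows how it could be computed; the function names and the scene labels in the example are hypothetical illustrations, not the challenge's actual evaluation code or the exact Places2 category names.

```python
from typing import List, Sequence


def top5_correct(ranked_guesses: Sequence[str], human_label: str) -> bool:
    """Correct if the human-assigned label is among the first five guesses."""
    return human_label in ranked_guesses[:5]


def top5_accuracy(guesses: List[Sequence[str]], labels: List[str]) -> float:
    """Fraction of photos whose human label appears in the top five guesses."""
    hits = sum(top5_correct(g, y) for g, y in zip(guesses, labels))
    return hits / len(labels)


# Two illustrative photos; the category names are hypothetical examples.
guesses = [
    ["fitness center", "gym", "locker room", "basketball court", "boxing ring"],
    ["dining room", "restaurant", "bar", "pantry", "living room"],
]
labels = ["gym", "kitchen"]

print(top5_accuracy(guesses, labels))      # 0.5
print(1 - top5_accuracy(guesses, labels))  # 0.5, the top-5 error
```

The first photo counts as correct even though “gym” is only the second guess, which is the point of allowing several answers. Classification challenges commonly rank entries by the complement of this number, the top-5 error rate.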

The winning team’s algorithms represented a marked improvement over previous algorithms for scene classification.

“In many ways, hosting a public challenge is as important as publishing seminal work,” said Oliva.

Scene classification example.

In deep learning, data has become the foundation for progress. Large labeled datasets such as PASCAL VOC, COCO and ImageNet have allowed machine learning algorithms to approach human-level performance at semantically classifying visual patterns such as objects and scenes.

“We released larger and richer data so that researchers could not only work together, but challenge each other and jointly push the slope of the field,” said Zhou. “It is like the Olympic Games in the computer vision field.”